Almost everyone in machine learning has heard about gradient boosting.

Why Is Gradient Boosting Good

Weak learners (for example, shallow trees) can together make a more accurate predictor. Most people who work in data science and machine learning will know that gradient boosting is one of the most powerful and effective algorithms out there.

In gradient boosting, why are new trees fit to the gradient of the loss function instead of the residual?

Many data scientists keep this algorithm in their toolbox because of the good results it often yields, even on problems they have not seen before. Fitting each new learner to the errors of the current ensemble is the core of gradient boosting and allows many simple learners to compensate for each other’s weaknesses to better fit the data.

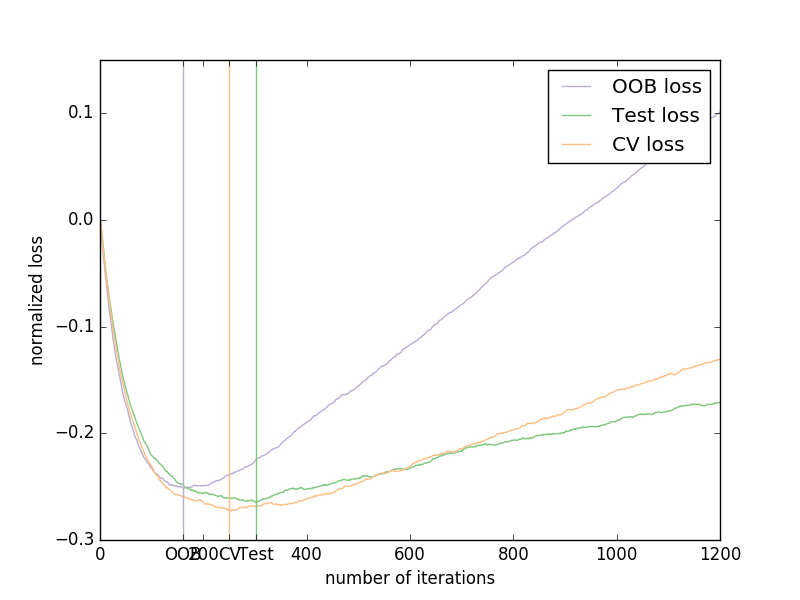

From Decision Trees and Random Forests to Gradient Boosting by Robby

Furthermore, XGBoost is often the standard recipe for winning ML competitions. It turns out that fitting each new model to the residuals is the solution you get when you optimize for mean squared error (MSE) loss. A common figure used to illustrate overfitting plots the training loss and the validation loss against training time. Gradient boosting models are greedy algorithms that are prone to overfitting on a dataset.

Introduction to Extreme Gradient Boosting in Exploratory by Kan

Extreme gradient boosting (XGBoost) is one of the most popular variants of gradient boosting. In the basic definition of gradient boosting, the additional models are trained only on the residuals.
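
Training additional models only on the residuals can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the helper names (`fit_stump`, `fit_boosted_stumps`, `predict`), the toy data, the learning rate, and the choice of the target mean as the first guess are all assumptions made for the example.

```python
# Minimal sketch of gradient boosting for squared error, using one-split
# regression stumps on a single feature. All names are illustrative.

def fit_stump(xs, targets):
    """Find the split threshold that minimises squared error on `targets`.

    Assumes `xs` contains at least two distinct values.
    """
    best = None
    for threshold in sorted(set(xs))[:-1]:
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((t - left_mean) ** 2 for t in left)
                 + sum((t - right_mean) ** 2 for t in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    return best[1:]  # (threshold, left_mean, right_mean)


def stump_predict(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def fit_boosted_stumps(xs, ys, n_rounds=50, learning_rate=0.1):
    base = sum(ys) / len(ys)  # assumed first guess: the mean target
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        # Each new stump is trained only on what the ensemble still gets wrong.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * stump_predict(stump, x)
                 for p, x in zip(preds, xs)]
    return base, learning_rate, stumps


def predict(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(stump_predict(s, x) for s in stumps)


def mse(ys, preds):
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 1.1, 3.0, 3.2, 2.9, 3.1]
model = fit_boosted_stumps(xs, ys)
mse_baseline = mse(ys, [sum(ys) / len(ys)] * len(ys))
mse_boosted = mse(ys, [predict(model, x) for x in xs])
print(mse_baseline, mse_boosted)
```

On this toy data the boosted ensemble should reach a lower training error than the constant first guess, because every round fits a stump to the current residuals and adds a shrunken copy of it to the prediction.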

Gradient Boosting Machine Learning Model MOCHINV

Gradient boosting gives a prediction model in the form of an ensemble of weak prediction models, which are typically decision trees. The common overfitting figure applies mostly to neural networks, where the abscissa represents the number of epochs, the blue line the training loss, and the red line the validation loss. A boosting model is an additive model.

How Gradient boosting can be more interpretable than CART? Cross

Gradient boosting is a machine learning algorithm used for both classification and regression problems. One of the biggest motivations for using gradient boosting is that it allows one to optimise a user-specified cost function, instead of a loss function that usually offers less control and ...

How to explain gradient boosting

“All in all, gradient boosting with probabilistic outputs can be fairly helpful in case you need to assess the noise in your target variable.”

Gradient Boosting from scratch. Simplifying a complex algorithm by

Why does gradient boosting generally outperform random forests? The idea of gradient boosting originated with Leo Breiman's observation that boosting can be interpreted as an optimization algorithm on a suitable cost function. GBMs can be regularized with four different methods. A similar algorithm, known as `GradientBoostingClassifier`, is used for classification.
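
For the classification case, a minimal sketch with scikit-learn's `GradientBoostingClassifier` might look as follows. The synthetic dataset, the split, and the hyperparameter values are assumptions chosen only to make the example runnable; they are not taken from the source.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import train_test_split

# Synthetic binary classification data (an assumption for illustration).
X, y = make_classification(n_samples=500, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

clf = GradientBoostingClassifier(
    n_estimators=100,   # number of boosting rounds (trees)
    learning_rate=0.1,  # contribution of each tree
    max_depth=3,        # shallow trees as weak learners
    random_state=0,
)
clf.fit(X_train, y_train)

accuracy = clf.score(X_test, y_test)  # mean accuracy on held-out data
proba = clf.predict_proba(X_test[:3])  # class probabilities, one row per sample
print(accuracy)
print(proba)
```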

Calibration plots of the light gradient boosting machine (A), extreme

Each successive model attempts to correct for the shortcomings of the combined boosted ensemble of all previous models. Because a boosting model is additive, the final output is a weighted sum of basis functions (shallow decision trees in the case of gradient tree boosting). Gradient boosting is one of the most popular machine learning algorithms for tabular datasets.
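
The weighted sum of basis functions can be written out as follows. The symbols are assumptions introduced here for illustration: $F_0$ is the initial guess, $h_m$ is the $m$-th shallow tree, $\gamma_m$ is its weight (which may absorb a learning rate), and $M$ is the number of trees.

$$
F_M(x) = F_0(x) + \sum_{m=1}^{M} \gamma_m \, h_m(x)
$$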

Gradient Boosting Out-of-Bag estimates: scikit-learn 0.17 documentation

Then you repeat this process of boosting many times. Gradient boosting usually outperforms random forest. In this article we'll focus on gradient boosting for classification problems.

Gradient boosting explained

Gradient boosting is agnostic of the type of loss function: each new tree is fit to the negative gradient of the loss, which coincides with the residual (up to a constant factor) only for squared error.
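
The difference between fitting the residual and fitting the gradient of the loss can be made concrete with a small sketch. The function names and the toy numbers are hypothetical; the point is only that the training target for the next tree is the negative gradient, and that this equals the plain residual when the loss is the squared error with a factor of 1/2.

```python
def neg_gradient_squared(y, pred):
    # loss = 0.5 * (y - pred) ** 2, so -dL/dpred = y - pred (the residual)
    return y - pred


def neg_gradient_absolute(y, pred):
    # loss = |y - pred|, so -dL/dpred = sign(y - pred)
    return (y > pred) - (y < pred)


def pseudo_residuals(ys, preds, neg_gradient):
    """Targets for the next tree: the negative gradient at each point."""
    return [neg_gradient(y, p) for y, p in zip(ys, preds)]


ys = [3.0, 5.0, 10.0]
preds = [4.0, 4.0, 4.0]  # current ensemble predictions (toy values)

squared_targets = pseudo_residuals(ys, preds, neg_gradient_squared)
absolute_targets = pseudo_residuals(ys, preds, neg_gradient_absolute)
print(squared_targets)   # [-1.0, 1.0, 6.0]
print(absolute_targets)  # [-1, 1, 1]
```

With the absolute loss the large error on the third point contributes only its sign, which is one reason a loss other than squared error may be preferred when the data contain outliers.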

Gradient Boosting Machines · UC Business Analytics R Programming Guide

Gradient boosting performs well on datasets where minimal effort has been spent on cleaning. A benefit of the gradient boosting framework is that a new boosting algorithm does not have to be derived for each loss function that one may want to use.

Comparison of mainstream gradient boosting algorithms Develop Paper

Gradient Boosting Machines · UC Business Analytics R Programming Guide

Gradient boosting continues to be one of the most successful ML techniques in Kaggle competitions and is widely used in practice across a variety of use cases. There are several variants of gradient boosting.

Gradient boosting Vs AdaBoosting: Simplest explanation of how to do

Gradient boosting relies on the intuition that the best possible next model, when combined with previous models, minimizes the overall prediction error.

python Gradient Boosting with a OLS Base Learner Stack Overflow

The thing to observe is that the motivation behind random forest and ...

machine learning Why are gradient boosting regression trees good

Gradient boosting is powerful enough to capture nonlinear relationships between the target and the features, and it is easy to use: many implementations can deal with missing values, outliers, and high-cardinality categorical features without any special treatment. Because of this, 2b reduces to 2a in principle.

Testing phase gradient boosting (GB) selected by virtue of its F1

Here is an example of an ensemble of simple trees (stumps) for regression. The four regularization methods are shrinkage, tree constraints, stochastic gradient boosting, and penalized learning.
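
As a rough sketch, three of the regularization methods named above map onto constructor parameters of scikit-learn's `GradientBoostingRegressor`. The dataset and the specific values are assumptions for illustration. This estimator has no explicit penalty term on leaf weights; libraries such as XGBoost expose that through `reg_alpha` and `reg_lambda`, which are not shown here.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import train_test_split

# Synthetic regression data (an assumption for illustration).
X, y = make_regression(n_samples=500, n_features=10, noise=10.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

model = GradientBoostingRegressor(
    n_estimators=200,
    learning_rate=0.05,  # shrinkage: scale down each tree's contribution
    max_depth=3,         # tree constraint: keep each tree shallow
    min_samples_leaf=5,  # tree constraint: avoid tiny leaves
    subsample=0.8,       # stochastic gradient boosting: row subsampling
    random_state=0,
)
model.fit(X_train, y_train)

r2 = model.score(X_test, y_test)  # R^2 on held-out data
print(r2)
```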

Gradient Boosting Explained GormAnalysis

Gradient boosting is a type of machine learning boosting. Variations on gradient boosting models.

Random forest VS Gradient boosting

Gradient Boosting speed on linear models with 10000+ features fast

The same question applies to gradient boosting, where the number of trees is quite critical and could replace the number of epochs on the abscissa.
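
One way to put the number of trees on the abscissa is to record the training and validation error after each boosting stage. The sketch below uses scikit-learn's `staged_predict` for that; the synthetic data and the hyperparameters are assumptions, and plotting is left out so the example stays dependency-light.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

# Synthetic, noisy regression data (an assumption for illustration).
X, y = make_regression(n_samples=400, n_features=8, noise=20.0, random_state=1)
X_train, X_val, y_train, y_val = train_test_split(X, y, random_state=1)

model = GradientBoostingRegressor(n_estimators=300, learning_rate=0.1, random_state=1)
model.fit(X_train, y_train)

# staged_predict yields the ensemble's predictions after 1, 2, ..., n trees.
train_errors = [mean_squared_error(y_train, p) for p in model.staged_predict(X_train)]
val_errors = [mean_squared_error(y_val, p) for p in model.staged_predict(X_val)]

# Number of trees with the lowest validation error.
best_n_trees = val_errors.index(min(val_errors)) + 1
print(best_n_trees)
```

Plotting `train_errors` and `val_errors` against the stage index would give the gradient boosting analogue of the training and validation loss curves drawn against epochs.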

Gradient Boosting from scratch. Simplifying a complex algorithm by

Python code for a gradient boosting regressor starts by importing models and utility functions.

Interactive demonstrations for ML courses

Gradient Boosting from Almost Scratch by John Clements Towards Data

Introduction to gradient boosting on decision trees with Catboost

It is so popular that the idea of stacking XGBoosts has become ...

dataset What are examples for XOR, parity and multiplexer problems in

The combination of these two models is expected to be better than either model alone.

Implementing Gradient Boosting Regression in Python Paperspace Blog

Written out, the derivative of the squared-error loss 1/2 * (observed - predicted)^2 with respect to the prediction is 2/2 * -(observed - predicted). We do not have a full theoretical analysis of it, so this answer is more about intuition than provable analysis.

The reason we use this loss in gradient boosting is that, when we differentiate it with respect to “predicted” and use the chain rule, the result simplifies to the negative residual, -(observed - predicted).
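
Spelled out step by step, and assuming the loss in question is the squared error with a factor of 1/2 (the symbol $L$ is introduced here only for illustration), the chain rule gives:

$$
L = \tfrac{1}{2}\,(\text{observed} - \text{predicted})^2
\quad\Longrightarrow\quad
\frac{\partial L}{\partial\,\text{predicted}}
= \tfrac{2}{2}\,(\text{observed} - \text{predicted}) \cdot (-1)
= -(\text{observed} - \text{predicted})
$$

The factor of 1/2 cancels the 2 that comes down from the exponent, so the negative gradient is exactly the residual.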

Why is it called gradient boosting? Each new model is fit to the negative gradient of the loss function with respect to the current predictions.

Gradient boosting is a machine learning technique used in regression and classification tasks, among others. Overfitting can be guarded against with several different methods that can improve the performance of a GBM.