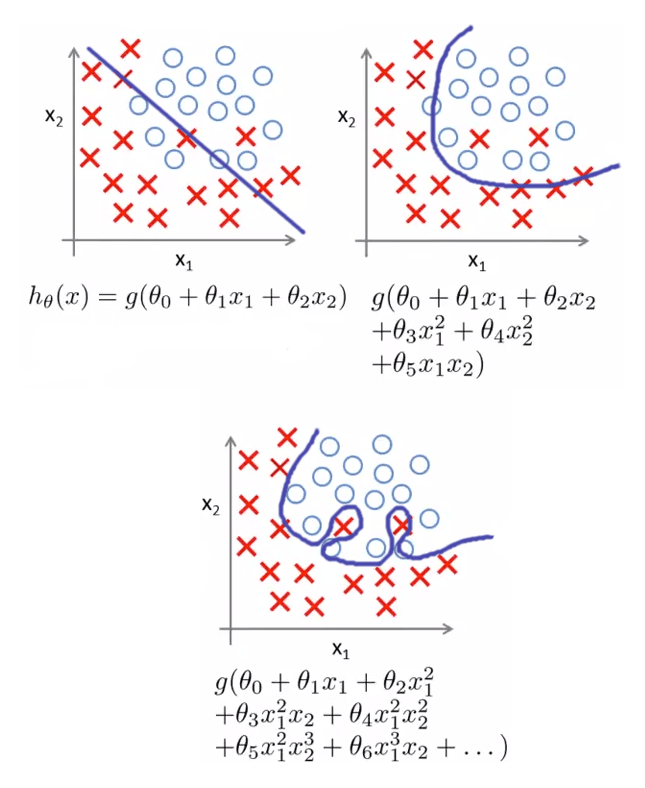

Overfitting mainly happens when the model's complexity is higher than the complexity of the data. The opposite problem, underfitting, occurs when the model is too simple: the resulting model does not capture the relationship between input and output well enough.
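
To make the link between model complexity and data complexity concrete, here is a minimal sketch (assuming NumPy is available) that fits polynomials of increasing degree to a small, noisy sample of a sine curve. The sine target, the noise level, the sample sizes and the helper names `true_fn`, `mse` and `fit_and_score` are illustrative assumptions, not taken from any particular source.

```python
import numpy as np


def true_fn(x):
    """Hypothetical underlying relationship: one period of a sine wave."""
    return np.sin(2 * np.pi * x)


def mse(y_true, y_pred):
    """Mean squared error between targets and predictions."""
    return float(np.mean((y_true - y_pred) ** 2))


def fit_and_score(degree, x_train, y_train, x_test, y_test):
    """Least-squares polynomial fit of the given degree; returns (train MSE, test MSE)."""
    # Polynomial.fit rescales x internally, which keeps higher-degree fits well conditioned.
    poly = np.polynomial.Polynomial.fit(x_train, y_train, deg=degree)
    return mse(y_train, poly(x_train)), mse(y_test, poly(x_test))


rng = np.random.default_rng(0)
x_train = rng.uniform(0, 1, 12)  # only 12 noisy training points
y_train = true_fn(x_train) + rng.normal(0, 0.2, x_train.size)
x_test = rng.uniform(0, 1, 500)  # a larger sample of unseen data
y_test = true_fn(x_test) + rng.normal(0, 0.2, x_test.size)

results = {}
for degree in (1, 3, 9):
    results[degree] = fit_and_score(degree, x_train, y_train, x_test, y_test)
    train_err, test_err = results[degree]
    print(f"degree={degree}  train MSE={train_err:.4f}  test MSE={test_err:.4f}")
```

Training error cannot go up as the degree grows, because each polynomial family contains the lower-degree ones. The straight line typically underfits (high error on both sets), while the degree-9 fit typically shows a near-zero training error and a noticeably larger test error; the exact numbers depend on the random sample.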

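What is overfitting in machine learning? Overfitting occurs during the learning process itself, as the model adapts more and more closely to its training data. It is different from underfitting, in which the machine learning algorithm performs poorly even on the training dataset because it cannot derive useful features from the training set.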

An overfit model starts catching the noise and inaccurate entries in its training data. When you train a neural network, you have to avoid this. To solve the problem of overfitting in a model, we need to reduce its flexibility; increasing flexibility is the remedy for underfitting, not overfitting.
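
One common way to reduce a model's flexibility is regularization. The sketch below, assuming NumPy, applies L2 (ridge) regularization to a degree-9 polynomial using the textbook closed form, solving `(X^T X + lam * I) w = X^T y`. The penalty strength `lam`, the data and the helper names `polynomial_features` and `ridge_fit` are hypothetical choices for illustration. Larger values of `lam` shrink the weights and trade a little training accuracy for a smoother, less flexible fit.

```python
import numpy as np


def polynomial_features(x, degree):
    """Design matrix with columns 1, x, x**2, ..., x**degree."""
    return np.vander(x, degree + 1, increasing=True)


def ridge_fit(X, y, lam):
    """Closed-form ridge regression: solve (X^T X + lam * I) w = X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)


rng = np.random.default_rng(1)
x = rng.uniform(-1, 1, 15)  # inputs kept in [-1, 1] so high powers stay bounded
y = np.sin(np.pi * x) + rng.normal(0, 0.2, x.size)
X = polynomial_features(x, degree=9)

summary = []
for lam in (1e-4, 1e-2, 1.0, 100.0):
    w = ridge_fit(X, y, lam)
    train_mse = float(np.mean((X @ w - y) ** 2))
    weight_norm = float(np.linalg.norm(w))
    summary.append((lam, train_mse, weight_norm))
    print(f"lam={lam:g}  train MSE={train_mse:.4f}  ||w||={weight_norm:.3f}")
```

For brevity the intercept is penalized too; many implementations leave it unpenalized. In practice `lam` would be chosen on held-out data, for example with k-fold cross-validation.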

Our ancestors said that too much of anything causes destruction, and their wisdom applies to machine learning algorithms too: overfitting is a condition in which a machine learning model learns far more from its training data than it should.

Building a machine learning model is not just about feeding it data; many deficiencies can affect the accuracy of a model. If your data is too poor, your model will have difficulty learning the desired objective.

Training relatively small architectures on an algorithmically generated dataset, Alethea Power and colleagues at OpenAI observed that ongoing training leads to an effect they call grokking, in which a transformer’s ability to generalize to novel data emerges well after overfitting.

A standard way to estimate how well a model generalizes is k-fold cross-validation. Randomly divide a dataset into k groups, or “folds”, of roughly equal size. Choose one of the folds to be the holdout set, fit the model on the remaining k - 1 folds, and evaluate it on the holdout set. Repeat this k times, so that each fold serves as the holdout set once, and average the k scores.
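
The k-fold splitting described above can be sketched in plain NumPy as follows. The helper names `k_fold_splits` and `cross_val_mse`, the synthetic data, and the use of a polynomial fit as the model being evaluated are illustrative assumptions; libraries such as scikit-learn provide their own tested implementations.

```python
import numpy as np


def k_fold_splits(n_samples, k, seed=0):
    """Yield (train_idx, holdout_idx) pairs; each sample lands in exactly one holdout fold."""
    rng = np.random.default_rng(seed)
    shuffled = rng.permutation(n_samples)  # randomly divide the dataset...
    folds = np.array_split(shuffled, k)    # ...into k folds of roughly equal size
    for i in range(k):
        holdout_idx = folds[i]             # fold i is the holdout set this round
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, holdout_idx


def cross_val_mse(x, y, degree, k=5):
    """Average holdout MSE of a polynomial fit over k folds."""
    scores = []
    for train_idx, holdout_idx in k_fold_splits(len(x), k):
        poly = np.polynomial.Polynomial.fit(x[train_idx], y[train_idx], deg=degree)
        scores.append(float(np.mean((poly(x[holdout_idx]) - y[holdout_idx]) ** 2)))
    return float(np.mean(scores))


rng = np.random.default_rng(42)
x = rng.uniform(0, 1, 40)
y = np.sin(2 * np.pi * x) + rng.normal(0, 0.2, x.size)
for degree in (1, 3, 9):
    print(f"degree={degree}  5-fold CV MSE={cross_val_mse(x, y, degree):.4f}")
```

Because every score comes from data the model did not see while fitting, the averaged value penalizes a model that merely memorizes its training folds.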

An overfit model has captured the common patterns in the data, but it has captured the noise too.

Robots are programmed so that they can perform tasks based on the data they gather from sensors. A statistical model is said to be overfitted when its capacity is too large for the data it is trained on (just like fitting ourselves into oversized pants!).

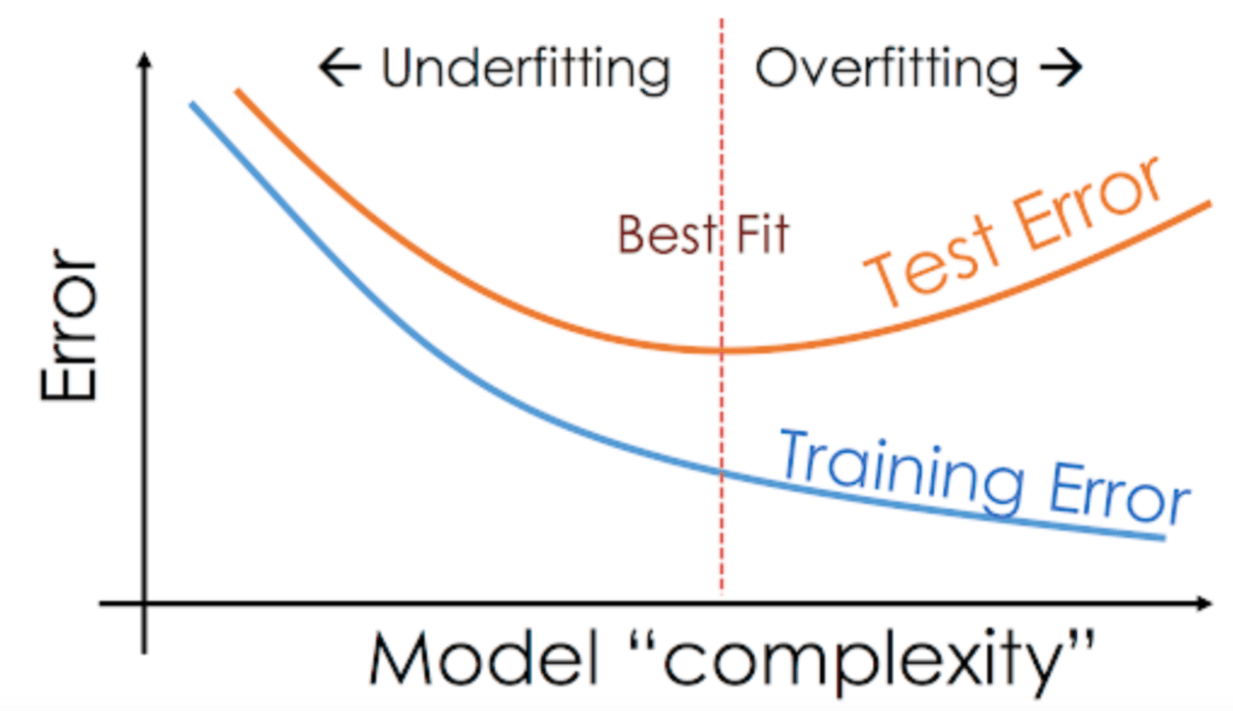

Overfitting occurs when a statistical model describes random error or noise instead of the underlying relationship. From the image above, we can see that capacity plays an important role in building a good machine learning model.

In other words, overfitting is the case where model performance on the training dataset is improved at the cost of worse performance on data not seen during training, such as a holdout test dataset or new data.

Photo by Dex Ezekiel on Unsplash.

Overfitting happens when a model learns the detail and noise in the training data to the extent that it negatively impacts the performance of the model on new data. The scenario in which the model performs well in the training phase but gives poor accuracy on the test dataset is called overfitting.

If our model does much better on the training set than on the test set, then we’re likely overfitting. For example, it would be a big red flag if our model saw 99% accuracy on the training set but only 55% accuracy on the test set.
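
This train-versus-test comparison can be automated with a small check like the one below. The helper names and the 10-percentage-point threshold (`max_gap`) are arbitrary assumptions for illustration; what counts as a worrying gap depends on the problem and on the size of the test set.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    if len(y_true) == 0 or len(y_true) != len(y_pred):
        raise ValueError("need two non-empty sequences of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def overfitting_report(train_acc, test_acc, max_gap=0.10):
    """Flag a model whose training accuracy exceeds its test accuracy by more than max_gap."""
    gap = train_acc - test_acc
    return {"train": train_acc, "test": test_acc, "gap": gap, "likely_overfitting": gap > max_gap}


# The example from the text: 99% training accuracy but only 55% test accuracy.
report = overfitting_report(0.99, 0.55)
print(f"gap={report['gap']:.2f}  likely overfitting: {report['likely_overfitting']}")
# prints "gap=0.44  likely overfitting: True"
```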

An overfit model picks up on noise or random fluctuations in the training data and learns them as concepts.

Put simply, overfitting refers to a model that models the training data too well. It is one of the deficiencies in machine learning that hinder both the accuracy and the overall performance of a model.

In some ways, overfitting stems from issues with how the original data model was built, creating gaps in the machine’s understanding.

Overfitting occurs when a machine learning model tries to cover all the data points, or more data points than required, in the given dataset. Overfit models produce good predictions for data points in the training set but perform poorly on new data.
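
A k-nearest-neighbour classifier with k = 1 is an extreme example of a model that covers every training point: each training example is its own nearest neighbour, so training accuracy is 100% even when some labels are wrong. The sketch below, assuming NumPy, flips about 20% of the training labels (a hypothetical noise level) and compares k = 1 with k = 15; the data, the linear labelling rule and the helper name `knn_predict` are illustrative assumptions.

```python
import numpy as np


def knn_predict(X_train, y_train, X_query, k):
    """Majority vote among the k nearest training points (binary labels 0/1, k odd)."""
    # Squared Euclidean distance from every query point to every training point.
    d2 = ((X_query[:, None, :] - X_train[None, :, :]) ** 2).sum(axis=2)
    nearest = np.argsort(d2, axis=1)[:, :k]
    return (y_train[nearest].mean(axis=1) > 0.5).astype(int)


rng = np.random.default_rng(7)
X_train = rng.uniform(-1, 1, (200, 2))
y_clean = (X_train[:, 0] + X_train[:, 1] > 0).astype(int)  # true rule: a straight line
flip = rng.random(200) < 0.2                                # corrupt ~20% of the labels
y_train = np.where(flip, 1 - y_clean, y_clean)

X_test = rng.uniform(-1, 1, (500, 2))
y_test = (X_test[:, 0] + X_test[:, 1] > 0).astype(int)     # clean labels for evaluation

scores = {}
for k in (1, 15):
    train_acc = float(np.mean(knn_predict(X_train, y_train, X_train, k) == y_train))
    test_acc = float(np.mean(knn_predict(X_train, y_train, X_test, k) == y_test))
    scores[k] = (train_acc, test_acc)
    print(f"k={k:2d}  train accuracy={train_acc:.2f}  test accuracy={test_acc:.2f}")
```

With k = 1 the model reproduces every corrupted label it was shown, which is exactly the "cover all the data points" behaviour; averaging over 15 neighbours smooths most of that noise away and usually generalizes better.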

Keep in mind that if the capacity of the model is higher than the complexity of the data, the model can overfit. Conversely, when the model’s capacity is too low for the data, it performs poorly even on the training set; this condition is called underfitting.

Overfitting happens when the model is too complex relative to the amount and noisiness of the training data. Such a model learns the information and the noise in the training data to the point where its performance on new data degrades.
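
The role of the amount of training data can be checked with a similar polynomial experiment: keep the model's capacity fixed (a degree-9 polynomial) and vary only the number of training points. This is a minimal sketch assuming NumPy; the sine target, the sample sizes, the noise level and the helper name `train_test_mse` are arbitrary illustrative choices.

```python
import numpy as np


def true_fn(x):
    """Hypothetical underlying relationship."""
    return np.sin(2 * np.pi * x)


def train_test_mse(n_train, degree=9, noise=0.2, seed=3):
    """Fit a fixed-degree polynomial on n_train noisy points; return (train MSE, test MSE)."""
    rng = np.random.default_rng(seed)
    x_train = rng.uniform(0, 1, n_train)
    y_train = true_fn(x_train) + rng.normal(0, noise, n_train)
    x_test = rng.uniform(0, 1, 2000)
    y_test = true_fn(x_test) + rng.normal(0, noise, x_test.size)
    poly = np.polynomial.Polynomial.fit(x_train, y_train, deg=degree)
    train_mse = float(np.mean((poly(x_train) - y_train) ** 2))
    test_mse = float(np.mean((poly(x_test) - y_test) ** 2))
    return train_mse, test_mse


small = train_test_mse(n_train=12)
large = train_test_mse(n_train=1000)
print(f"12 points:   train={small[0]:.4f}  test={small[1]:.4f}  gap={small[1] - small[0]:.4f}")
print(f"1000 points: train={large[0]:.4f}  test={large[1]:.4f}  gap={large[1] - large[0]:.4f}")
```

With only 12 points the degree-9 model has almost as many coefficients as data points, so it can chase the noise; with 1000 points the same model is pinned down by the data, and its test error approaches the noise variance (0.2 squared, i.e. 0.04).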

We can identify whether a machine learning model has overfit by comparing its performance on the training data with its performance on data it has not seen, such as a holdout set.

When a model fits its training data too closely, it starts learning from the noise and inaccurate data entries in the data set.

This can happen for many reasons, most importantly that a model was built for specific outcomes rather than slightly more generalized ones. While the above is the established definition of overfitting, recent research indicates that complex models, such as deep learning models and neural networks, can perform with high accuracy despite being trained to fit their training data exactly.

In terms of bias and variance, overfitting occurs when a machine learning algorithm or statistical model captures the noise of the data and shows low bias but high variance.
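
Low bias and high variance can be estimated directly by simulation: retrain the same model on many noisy versions of the training set and look at how its predictions behave. The sketch below, assuming NumPy, keeps the training inputs fixed, resamples only the label noise, and splits the error on a grid into squared bias (how far the average prediction is from the truth) and variance (how much predictions scatter across retrainings). The target function, noise level, number of repetitions and the helper name `bias_variance` are illustrative assumptions.

```python
import numpy as np


def true_fn(x):
    """Hypothetical underlying relationship."""
    return np.sin(2 * np.pi * x)


def bias_variance(degree, n_repeats=300, noise=0.2, seed=0):
    """Monte Carlo estimate of (squared bias, variance) for a polynomial fit of a given degree."""
    rng = np.random.default_rng(seed)
    x_train = np.linspace(0, 1, 15)  # fixed inputs; only the label noise is resampled
    x_grid = np.linspace(0, 1, 101)  # points where bias and variance are measured
    preds = np.empty((n_repeats, x_grid.size))
    for r in range(n_repeats):
        y_train = true_fn(x_train) + rng.normal(0, noise, x_train.size)
        poly = np.polynomial.Polynomial.fit(x_train, y_train, deg=degree)
        preds[r] = poly(x_grid)
    mean_pred = preds.mean(axis=0)
    bias_sq = float(np.mean((mean_pred - true_fn(x_grid)) ** 2))
    variance = float(np.mean(preds.var(axis=0)))
    return bias_sq, variance


estimates = {d: bias_variance(d) for d in (1, 3, 9)}
for degree, (bias_sq, variance) in estimates.items():
    print(f"degree={degree}  bias^2={bias_sq:.4f}  variance={variance:.4f}")
```

The straight line (degree 1) shows high bias and low variance; the degree-9 polynomial shows the reverse, which is the low-bias, high-variance signature of overfitting described above.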

To sum up, overfitting is an issue within machine learning and statistics where a model learns the patterns of a training dataset too well, perfectly explaining the training data set but failing to generalize its predictive power to other sets of data. Our main objective in machine learning is to properly estimate the distribution and probability in the training dataset, so that we get a generalized model that can predict the distribution and probability of the test dataset.