But we’re not here to win a Kaggle challenge; we’re here to learn how to prevent overfitting in our deep learning models. In machine learning, the goal is to predict the most probable output, and overfitting can severely hurt the accuracy of those predictions.

One of the most important aims for any model is to generalize well to unseen data. Overfitting is a common explanation for the poor performance of a predictive model.

Overfitting is a common pitfall in deep learning algorithms, in which a model tries to fit the training data entirely and ends up memorizing not only the underlying patterns but also the noise and random fluctuations. In other words, the model memorizes the training data instead of using that information to generalize to unseen data. Building a machine learning model is not just about feeding it data: many deficiencies can affect a model’s accuracy, and overfitting is one of them, hurting both the accuracy and the performance of the model.

While the above is the established definition of overfitting, recent research indicates that complex models, such as deep learning models and neural networks, can perform at high accuracy despite being trained to “exactly fit or interpolate” the training data. This finding is directly at odds with the historical literature on this topic.

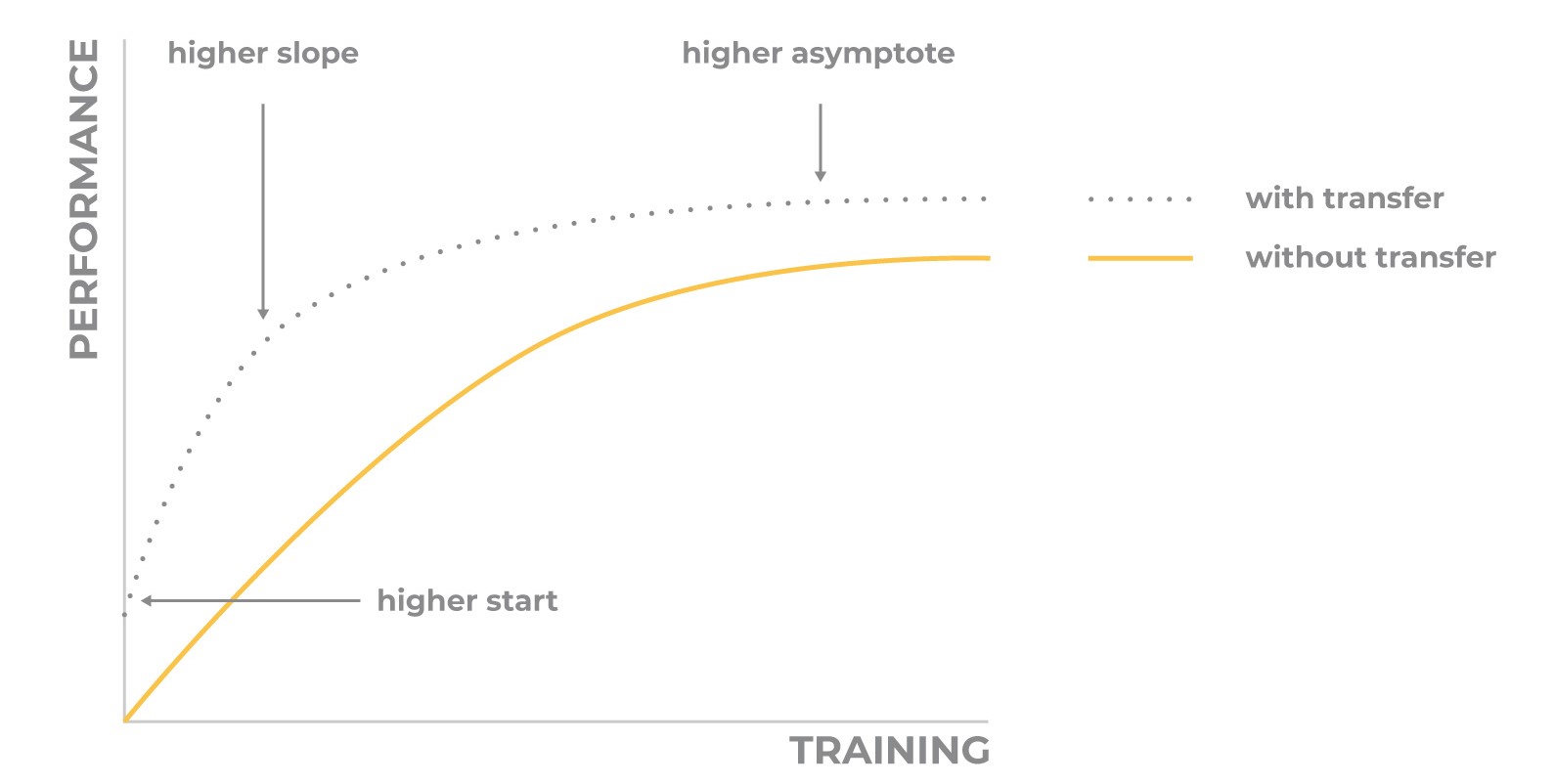

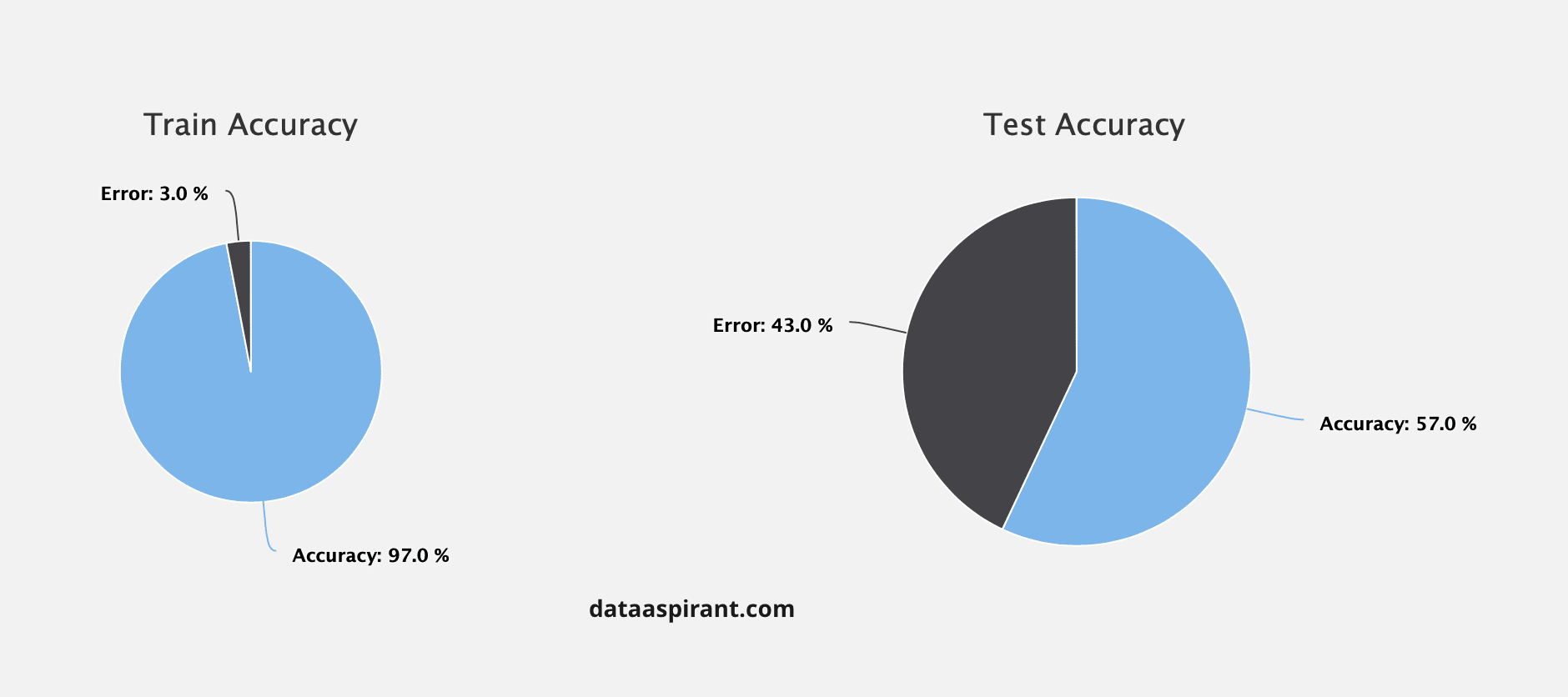

It would be a big red flag, for instance, if our model reached 99% accuracy on the training set but only 55% accuracy on the test set. To see how we can prevent overfitting, we first need to create a base model to compare the improved models against.
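The train/test comparison above can be turned into a quick check. This is a minimal sketch, assuming accuracies are given as fractions between 0 and 1; the function name `overfitting_gap` and the 0.10 threshold are hypothetical choices for illustration, not a standard cutoff.

```python
def overfitting_gap(train_acc: float, test_acc: float, threshold: float = 0.10) -> tuple[float, bool]:
    """Return the train/test accuracy gap and whether it exceeds `threshold`.

    `threshold` is a hypothetical cutoff chosen for illustration only.
    """
    gap = train_acc - test_acc
    return gap, gap > threshold


# The "red flag" case from the text: 99% train accuracy vs. 55% test accuracy.
gap, flagged = overfitting_gap(0.99, 0.55)
print(f"gap = {gap:.2f}, likely overfitting: {flagged}")
```

A sensible threshold depends on the task and on how noisy the test estimate is, so treat the flag as a prompt to investigate rather than a verdict.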

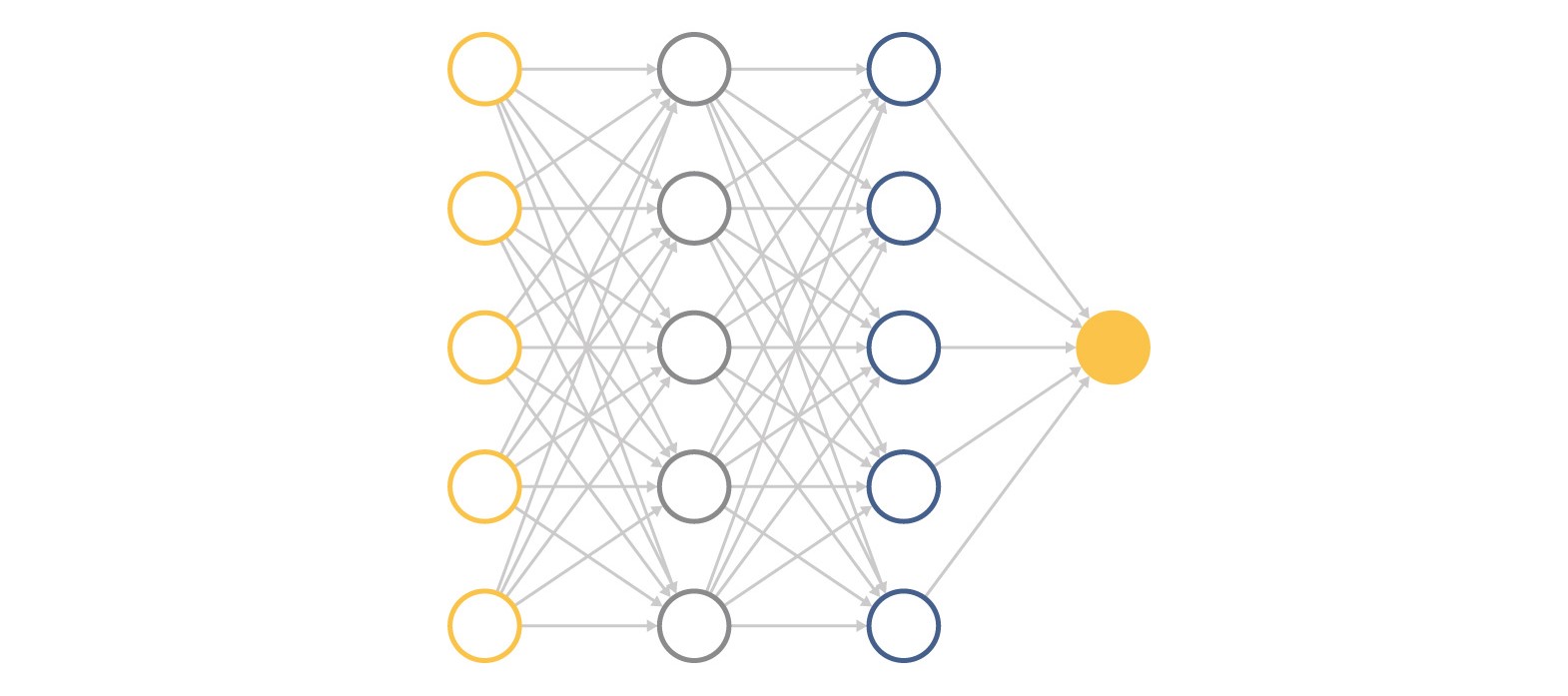

The key motivation for deep learning is to build algorithms that mimic the human brain. Put simply, overfitting is when the algorithm starts paying too much attention to small details: if our model does much better on the training set than on the test set, we’re likely overfitting. New work has found, however, that overfitting isn’t necessarily the end of the line.

Simply put, when a machine learning model remembers the patterns in its training data but fails to generalize, we call it overfitting: the model achieves a good fit on the training data but does not generalize well to new, unseen data.

Deep learning gives machines the ability to think and learn on their own, but building such models brings its own problems, and overfitting is one of them.

Overfitting is a problem that a model can exhibit.

The issue is that the notions a model picks up from noise do not apply to fresh data, which limits its ability to generalize.

Apr 6, 2022 2 min read.

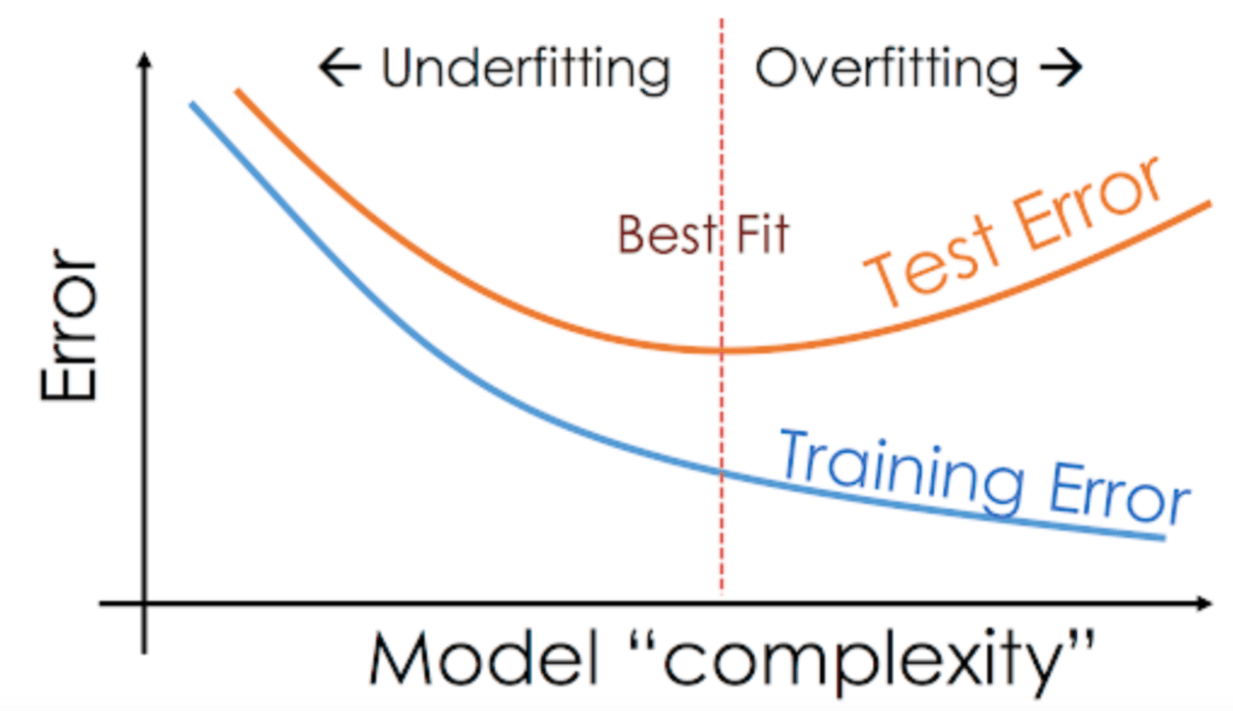

The base model is a simple Keras model with two hidden layers of 128 and 64 neurons. Informally, a model’s capacity is its ability to fit a wide variety of functions.
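One rough proxy for a network’s capacity is its number of trainable parameters. The sketch below counts the weights and biases of a fully connected network with the two hidden layers of 128 and 64 units mentioned above; the 10,000 input features and 3 output classes are hypothetical assumptions, not values taken from the original base model.

```python
def dense_param_count(layer_sizes: list[int]) -> int:
    """Count weights + biases in a stack of fully connected layers.

    layer_sizes = [input_dim, hidden_1, ..., output_dim]
    """
    total = 0
    for fan_in, fan_out in zip(layer_sizes, layer_sizes[1:]):
        total += fan_in * fan_out + fan_out  # weight matrix + bias vector
    return total


# Hypothetical sizes (assumptions): 10,000 input features and 3 output classes.
base = dense_param_count([10_000, 128, 64, 3])
smaller = dense_param_count([10_000, 64, 3])  # one narrower hidden layer
print(base, smaller)
```

Most of the parameters sit in the first layer, so narrowing it from 128 to 64 units and dropping the second hidden layer roughly halves the count, which is one simple way to reduce capacity.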

Deep learning is one of the most revolutionary technologies at present. Overfitting is an issue within machine learning and statistics where a model learns the patterns of a training dataset too well, perfectly explaining the training data. Overfitting and underfitting are interrelated concepts that go together.

Overfitted models fail to generalize and perform poorly on unseen data, defeating the model’s purpose.

Underfitting, by contrast, is when the training error itself is high: the model fails to capture the patterns even in the training data.

Understanding one of these two problems helps us understand the other, and vice versa. A model with low capacity is likely to underfit the training set, while a model with high capacity is likely to overfit by memorizing detailed properties of the training set that are not present in the test set.
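The capacity trade-off is easiest to see in a toy curve-fitting problem rather than a deep network. This classic polynomial illustration is swapped in here and is not from the original article: it fits eight noisy points of the line y = 2x twice, once with a straight line (low capacity) and once with the degree-7 polynomial that passes exactly through every training point (high capacity, i.e. it interpolates). The data and the alternating noise pattern are made up for illustration.

```python
def lagrange_predict(xs, ys, x):
    """Evaluate the unique polynomial of degree len(xs)-1 through (xs, ys) at x."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        term = yi
        for j, xj in enumerate(xs):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a * x + b; returns (a, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return a, my - a * mx


def mse(preds, targets):
    """Mean squared error between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(targets)


# Made-up training data: the true relation y = 2x plus alternating +/-0.5 "noise".
train_x = list(range(8))
train_y = [2 * x + (0.5 if x % 2 == 0 else -0.5) for x in train_x]
# Unseen points between the training inputs, labelled with the noise-free relation.
test_x = [x + 0.5 for x in range(7)]
test_y = [2 * x for x in test_x]

a, b = fit_line(train_x, train_y)
line_train = mse([a * x + b for x in train_x], train_y)
line_test = mse([a * x + b for x in test_x], test_y)
poly_train = mse([lagrange_predict(train_x, train_y, x) for x in train_x], train_y)
poly_test = mse([lagrange_predict(train_x, train_y, x) for x in test_x], test_y)

print(f"line:                train {line_train:.3f}, test {line_test:.3f}")
print(f"degree-7 polynomial: train {poly_train:.3f}, test {poly_test:.3f}")
```

The polynomial reaches zero training error because it memorizes the noise, but it oscillates between the training points, especially near the ends of the range, so its error on the unseen midpoints is far larger than the line’s.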

Overfitting is when the testing error is high compared to the training error, or when the gap between the two is large.
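Combining this definition with the usual underfitting criterion (a high training error) gives a simple rule of thumb. This is a sketch, assuming errors are fractions between 0 and 1; the function name `diagnose` and both thresholds are hypothetical and would need tuning for each task.

```python
def diagnose(train_error: float, test_error: float,
             high_error: float = 0.2, max_gap: float = 0.05) -> str:
    """Rough label for a model based on its training and test error.

    Both thresholds are hypothetical and task-dependent.
    """
    if train_error > high_error:
        return "underfitting"    # cannot even fit the training set well
    if test_error - train_error > max_gap:
        return "overfitting"     # fits the training data, fails to generalize
    return "reasonable fit"


for train_err, test_err in [(0.35, 0.38), (0.01, 0.45), (0.08, 0.10)]:
    print(train_err, test_err, "->", diagnose(train_err, test_err))
```

The underfitting check comes first on purpose: a large gap matters little if the model cannot fit the training data in the first place.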

Nonparametric and nonlinear models, which have more flexibility when learning a target function, are more prone to overfitting.
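To make the point about flexible nonparametric models concrete, here is a k-nearest-neighbours classifier, a nonparametric model chosen for illustration (it is not discussed in the original). With k = 1 it memorizes every training point, including two deliberately mislabelled ones; with k = 5 it smooths over them. The 1-D data and the noisy indices are hypothetical.

```python
def knn_predict(train, x, k):
    """Predict a 0/1 label for x by majority vote of its k nearest training points (1-D inputs)."""
    neighbours = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    votes = sum(label for _, label in neighbours)
    return 1 if 2 * votes > k else 0


def accuracy(train, data, k):
    """Fraction of (x, label) pairs in `data` that k-NN fitted on `train` gets right."""
    return sum(knn_predict(train, x, k) == y for x, y in data) / len(data)


# Hypothetical 1-D task: the true label is 1 when x >= 10, else 0.
NOISY = {3, 15}  # hypothetical mislabelled training points
train = [(x, int(x >= 10) ^ (x in NOISY)) for x in range(20)]
# Unseen test inputs between the training points, with clean labels.
test = [(x + 0.4, int(x + 0.4 >= 10)) for x in range(19)]

for k in (1, 5):
    print(f"k={k}: train acc {accuracy(train, train, k):.2f}, "
          f"test acc {accuracy(train, test, k):.2f}")
```

On this toy data, 1-NN scores perfectly on the training set but copies the two wrong labels onto nearby test points, while 5-NN gives up those two training points and classifies every test point correctly.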

When a model fits its training data too closely, it starts learning from the noise and inaccurate data entries in our data set.

In other words, the model learned patterns specific to the training data that do not hold in other data. Keeping the training error small and keeping the gap between training and test error small correspond to the two central challenges in machine learning: underfitting and overfitting.

Training relatively small architectures on an algorithmically generated dataset, Alethea Power and colleagues at OpenAI observed that ongoing training leads to an effect they call grokking, in which a transformer’s ability to generalize to novel data emerges well after overfitting.

A statistical model is said to be overfitted if it can’t generalize well to unseen data. To help it generalize, we need to feed the model as much relevant data as we can.

We can identify overfitting by looking at validation metrics such as loss or accuracy.
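Watching validation loss is also the basis of early stopping, a widely used remedy that the text does not name explicitly: stop training once validation loss has stopped improving and keep the best epoch. Below is a framework-free sketch; the function name, the `patience` value, and the loss curve are hypothetical. Keras offers the same idea through its `EarlyStopping` callback.

```python
def early_stopping_epoch(val_losses: list[float], patience: int = 3) -> int:
    """Return the epoch (0-based) whose weights early stopping would keep.

    Training stops once validation loss has not improved for `patience`
    consecutive epochs; the best epoch seen so far is returned.
    """
    best_epoch, best_loss = 0, float("inf")
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss = epoch, loss
        elif epoch - best_epoch >= patience:
            break  # no improvement for `patience` epochs: stop training
    return best_epoch


# Hypothetical validation losses: they fall, then rise as the model starts to overfit.
val_losses = [0.90, 0.62, 0.48, 0.41, 0.39, 0.40, 0.43, 0.47, 0.52, 0.58]
print(early_stopping_epoch(val_losses))
```

A larger `patience` tolerates noisy validation curves at the cost of a few wasted epochs; note that, by design, any later improvement after the stopping point is never seen.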

A statistical model is said to be overfitted when it fits its training data too closely. Overfitting mainly happens when model complexity is higher than the data complexity. Underfitting calls for the opposite remedy: according to Andrew Ng, the best ways of dealing with an underfitting model are trying a bigger neural network (adding new layers or increasing the number of neurons in existing layers) or training the model a little longer.