Overfitting occurs when a model is too complex for the data it is trained on. Supervised machine learning is best understood as approximating a target function that maps input variables to output variables.

The training data set in machine learning is the actual dataset used to train the model to perform its task. Overfitting occurs when we build models that closely explain a training data set but fail to generalize when applied to other data sets.

The opposite of overfitting is underfitting, and the two concepts are closely interrelated. Overfitting generally takes the form of building an overly complex model. When a machine learning algorithm starts to register the noise within the data, we call it overfitting.
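
To make the two failure modes concrete, here is a minimal sketch on synthetic data. It assumes a made-up "true" function, sin(2πx), plus Gaussian noise, and uses polynomial degree as a stand-in for model complexity; none of these choices come from the text. Training error keeps falling as the degree grows, while error on fresh data is high for the too-simple model (underfitting) and rises again for the too-complex one (overfitting).

```python
import numpy as np
from numpy.polynomial import Polynomial

def make_data(n, rng, noise=0.2):
    """Noisy samples of an assumed target function, sin(2*pi*x), on [0, 1]."""
    x = rng.uniform(0.0, 1.0, n)
    y = np.sin(2 * np.pi * x) + rng.normal(0.0, noise, n)
    return x, y

def mse(y_true, y_pred):
    """Mean squared error between targets and predictions."""
    return float(np.mean((y_true - y_pred) ** 2))

rng = np.random.default_rng(0)
x_train, y_train = make_data(15, rng)   # small training set
x_test, y_test = make_data(500, rng)    # fresh data the model never sees

errors = {}
for degree in range(1, 13):
    model = Polynomial.fit(x_train, y_train, deg=degree)  # least-squares polynomial fit
    errors[degree] = (mse(y_train, model(x_train)), mse(y_test, model(x_test)))

for degree, (train_err, test_err) in errors.items():
    print(f"degree {degree:2d}: train MSE {train_err:.3f}  test MSE {test_err:.3f}")
```

Degree 1 is too rigid to follow the curve, while degree 12 has almost as many coefficients as there are training points and bends to fit the noise.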

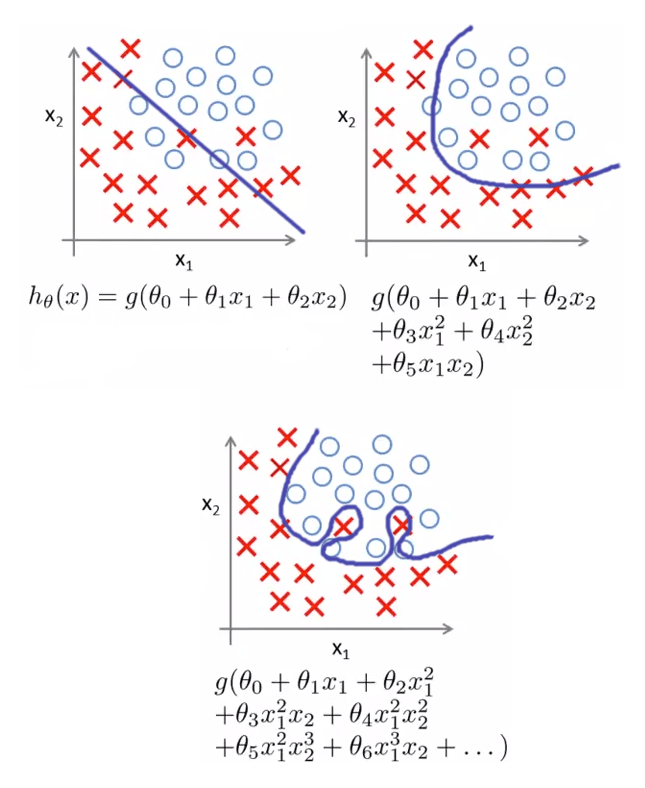

One way to make this precise: model A overfits if we can move from model A to a model B that has a lower training performance but a higher test performance.

Overfitting is the result of an ML model placing importance on relatively unimportant information in the training data. In simpler words, overfitting happens when the algorithm starts paying too much attention to the small details. A common way to tackle overfitting is regularization, which penalizes overly complex models. Underfitting is the opposite problem (it's just like trying to fit into undersized pants!), and it destroys the accuracy of our machine learning model.
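
Ridge regression is one common regularization technique: it adds a penalty λ‖w‖² to the least-squares loss, which shrinks the coefficients and leaves the fitted curve less room to chase noise. The sketch below assumes a degree-9 polynomial model on made-up sin(πx) data; `poly_features` and `ridge_fit` are illustrative helpers, and for brevity every coefficient (including the intercept) is penalized, which library implementations usually avoid.

```python
import numpy as np

def poly_features(x, degree):
    """Design matrix with columns 1, x, x^2, ..., x^degree."""
    return np.vander(x, degree + 1, increasing=True)

def ridge_fit(X, y, lam):
    """Closed-form ridge solution w = (X^T X + lam * I)^(-1) X^T y.

    Simplification: every coefficient is penalized, including the intercept.
    """
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

rng = np.random.default_rng(1)
x_train = rng.uniform(-1.0, 1.0, 20)
y_train = np.sin(np.pi * x_train) + rng.normal(0.0, 0.2, 20)
x_test = rng.uniform(-1.0, 1.0, 500)
y_test = np.sin(np.pi * x_test) + rng.normal(0.0, 0.2, 500)

X_train, X_test = poly_features(x_train, 9), poly_features(x_test, 9)
lambdas = (1e-6, 1e-3, 1e-1, 1.0)
norms, test_errors = [], []
for lam in lambdas:
    w = ridge_fit(X_train, y_train, lam)
    norms.append(float(np.linalg.norm(w)))  # coefficient size shrinks as lam grows
    test_errors.append(float(np.mean((y_test - X_test @ w) ** 2)))
    print(f"lambda={lam:g}: ||w|| = {norms[-1]:.2f}, test MSE = {test_errors[-1]:.3f}")
```

Larger λ trades a little extra bias for lower variance; in practice λ is usually chosen on held-out data rather than fixed in advance.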

Understanding overfitting helps us understand underfitting, and vice versa. An overfit model works well on the training data but does not generalize well. This can happen because there are too many features in the data or because we have not supplied enough data.
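
The "too many features, not enough data" case can be sketched with ordinary least squares. In this hypothetical setup only the first feature actually drives the target and the other 25 columns are pure noise. Adding them can only lower the training error, because the smaller model is nested inside the larger one, yet with 26 features and only 30 training rows the predictions on new data get much worse.

```python
import numpy as np

rng = np.random.default_rng(2)
n_train, n_test, n_noise = 30, 1000, 25

def make_data(n):
    """Target depends only on column 0; the other n_noise columns are irrelevant."""
    X = rng.normal(size=(n, 1 + n_noise))
    y = 2.0 * X[:, 0] + rng.normal(0.0, 1.0, n)
    return X, y

X_train, y_train = make_data(n_train)
X_test, y_test = make_data(n_test)

def fit_and_score(n_features):
    """Least-squares fit on the first n_features columns; returns (train MSE, test MSE)."""
    w, *_ = np.linalg.lstsq(X_train[:, :n_features], y_train, rcond=None)
    train = np.mean((y_train - X_train[:, :n_features] @ w) ** 2)
    test = np.mean((y_test - X_test[:, :n_features] @ w) ** 2)
    return float(train), float(test)

small = fit_and_score(1)             # only the informative feature
large = fit_and_score(1 + n_noise)   # plus 25 noise features, close to n_train
print("1 feature:   train %.2f  test %.2f" % small)
print("26 features: train %.2f  test %.2f" % large)
```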

Building a machine learning model is not just about feeding it data; many deficiencies can affect the accuracy of a model. Overfitting occurs when the model tries to cover all the data points, or more than the required data points, present in the given dataset. When an ML model has been overfit, it can't make accurate predictions on new data.

In machine learning terminology, underfitting means that a model is too general, leading to high bias, while overfitting means that a model is too specific, leading to high variance. To solve the problem of overfitting, we need to reduce the flexibility of our model; increasing flexibility is the remedy for underfitting. An underfit machine learning model is not a suitable model, and this will be obvious because it performs poorly even on the training data.
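
Bias and variance can be estimated by simulation: refit the same kind of model on many independent training sets and look at how its predictions behave. This is a sketch under assumed conditions (a sin(2πx) target, 20 noisy points per training set, 300 repetitions). A straight line (too general) should show high bias and low variance, while a degree-7 polynomial (more specific) should show the reverse.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(3)
x_eval = np.linspace(0.1, 0.9, 50)  # points where predictions are compared

def true_f(x):
    """Assumed target function for this illustration."""
    return np.sin(2 * np.pi * x)

def bias_variance(degree, n_repeats=300, n_train=20, noise=0.2):
    """Refit on many fresh training sets; return (bias^2, variance) averaged over x_eval."""
    preds = np.empty((n_repeats, x_eval.size))
    for r in range(n_repeats):
        x = rng.uniform(0.0, 1.0, n_train)
        y = true_f(x) + rng.normal(0.0, noise, n_train)
        preds[r] = Polynomial.fit(x, y, deg=degree)(x_eval)
    bias_sq = np.mean((preds.mean(axis=0) - true_f(x_eval)) ** 2)  # error of the average model
    variance = np.mean(preds.var(axis=0))                         # spread across training sets
    return float(bias_sq), float(variance)

results = {degree: bias_variance(degree) for degree in (1, 7)}
for degree, (b, v) in results.items():
    print(f"degree {degree}: bias^2 = {b:.4f}, variance = {v:.4f}")
```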

With machine learning becoming an essential technology in computational biology, we must include training about overfitting in all courses that introduce this technology to students and practitioners.

Supervised learning in machine learning is one method for the model to learn and understand data. There are other types of learning, such as unsupervised and reinforcement learning, but those are topics for another time and another blog post. Overfitting occurs when your model has learnt the training data too well.

In reality, underfitting is probably better than overfitting, because at least your model is performing to some expected standard. Overfitting is a part of life as a data scientist.

What is meant by test data? Test data is data held back from training and used only to evaluate the model, so the error measured on it estimates how well the model generalizes to new data.
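
When data is scarce, k-fold cross-validation is a common way to get test-data estimates without giving up a large holdout set. The data is split into k folds, and each fold serves as test data exactly once while the model is trained on the rest. The sketch below is a from-scratch version (the `cv_mse` helper is hypothetical, not a library function) that uses it to pick a polynomial degree on assumed sin(2πx) data.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(4)
x = rng.uniform(0.0, 1.0, 40)
y = np.sin(2 * np.pi * x) + rng.normal(0.0, 0.2, 40)

def cv_mse(x, y, degree, k=5):
    """Average held-out MSE over k folds; each fold is used as test data exactly once."""
    folds = np.array_split(np.arange(len(x)), k)
    errors = []
    for test_idx in folds:
        train_idx = np.setdiff1d(np.arange(len(x)), test_idx)
        model = Polynomial.fit(x[train_idx], y[train_idx], deg=degree)
        errors.append(np.mean((y[test_idx] - model(x[test_idx])) ** 2))
    return float(np.mean(errors))

scores = {d: cv_mse(x, y, d) for d in range(1, 13)}
best_degree = min(scores, key=scores.get)
print("cross-validated MSE by degree:", {d: round(s, 3) for d, s in scores.items()})
print("selected degree:", best_degree)
```

Because `x` is drawn at random, splitting by index already gives random folds. With sorted or grouped data you would shuffle the indices first.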

Overfitting is an error that occurs in data modeling as a result of a particular function aligning too closely to a limited set of data points. Knowing “how off” a model’s predictions are is therefore a matter of knowing how close it is to overfitting or underfitting.

Machine learning algorithms approximate a target function; overfitting and underfitting describe two ways in which that approximation can fail. An overfit model starts capturing the noise and inaccurate values present in the data.

Techniques used to reduce overfitting include:

- elastic net method (regression)
- lasso method (regression)
- ridge method (regression)
- ReLU activation function (neural networks)
- reducing the number of hidden layers (neural networks)
- pruning (decision tree regression and classification)

Overfitting can be detected by testing the model on data it was not trained on.
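
The lasso and elastic net methods can be sketched with cyclic coordinate descent. This minimal version assumes a glmnet-style objective scaling, and `elastic_net` and `soft_threshold` are hypothetical helpers rather than a library API. The L1 part of the penalty pushes the coefficients of irrelevant features to exactly zero, which is how the lasso removes features that would otherwise let the model fit noise. The data is made up: 10 features, of which only the first two matter.

```python
import numpy as np

def soft_threshold(z, t):
    """Shrink z toward zero by t; any value in [-t, t] becomes exactly 0."""
    return np.sign(z) * max(abs(z) - t, 0.0)

def elastic_net(X, y, lam, l1_ratio=1.0, n_iters=200):
    """Cyclic coordinate descent for
        (1 / 2n) * ||y - X w||^2 + lam * (l1_ratio * ||w||_1 + (1 - l1_ratio) / 2 * ||w||^2).
    l1_ratio=1 is the lasso; l1_ratio=0 reduces to a ridge penalty.
    """
    n, p = X.shape
    w = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0) / n
    residual = np.array(y, dtype=float)  # equals y - X @ w while w == 0
    for _ in range(n_iters):
        for j in range(p):
            residual += X[:, j] * w[j]    # take feature j out of the fit
            rho = X[:, j] @ residual / n  # its correlation with what is left
            w[j] = soft_threshold(rho, lam * l1_ratio) / (col_sq[j] + lam * (1 - l1_ratio))
            residual -= X[:, j] * w[j]    # put the updated feature back
    return w

rng = np.random.default_rng(5)
X = rng.normal(size=(200, 10))
true_w = np.array([3.0, -2.0] + [0.0] * 8)  # assumed: only two features matter
y = X @ true_w + rng.normal(0.0, 0.5, 200)

w_lasso = elastic_net(X, y, lam=0.1, l1_ratio=1.0)
w_enet = elastic_net(X, y, lam=0.1, l1_ratio=0.5)
print("lasso:      ", np.round(w_lasso, 3))
print("elastic net:", np.round(w_enet, 3))
```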

Overfitting is a condition that occurs when a machine learning or deep neural network model performs significantly better on training data than it does on new data. In machine learning, the goal is to predict the probable output, and overfitting can severely hurt the accuracy of those predictions.
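
The "better on training data than on new data" criterion can be turned into a rough rule of thumb. In the sketch below, `diagnose` is a hypothetical helper, and both thresholds (the acceptable error level and the 10% tolerated gap) are illustrative assumptions that would need tuning for a real problem.

```python
def diagnose(train_error, test_error, acceptable_error, gap_tolerance=0.1):
    """Rough, rule-of-thumb label for a fitted model.

    acceptable_error: the error level you would be happy with (problem-specific).
    gap_tolerance: how much worse test error may be than training error, as a
    fraction of the training error, before we call it overfitting.
    Both thresholds are illustrative assumptions, not standard values.
    """
    if train_error > acceptable_error:
        return "underfitting: poor even on the training data"
    if test_error > train_error * (1 + gap_tolerance):
        return "overfitting: much better on training data than on new data"
    return "reasonable fit"

print(diagnose(0.50, 0.55, acceptable_error=0.10))   # underfitting branch
print(diagnose(0.01, 0.30, acceptable_error=0.10))   # overfitting branch
print(diagnose(0.05, 0.052, acceptable_error=0.10))  # reasonable fit
```

Checking the training error first reflects the point that an underfit model is already poor on the data it was trained on, so its train/test gap says little.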

Underfitting, by contrast, simply means that the model or algorithm does not fit the data well enough.

An overfitting model is a model that has learned many wrong patterns: the algorithm copies the training data's trends, noise included, and this results in poor predictions on new data.

A machine learning model is meant to learn patterns.

The problem is determining which part of the data to ignore as noise. The worst-case scenario is when you tell your boss you have an amazing new model that will change the world, only for it to fall apart on data it has never seen.

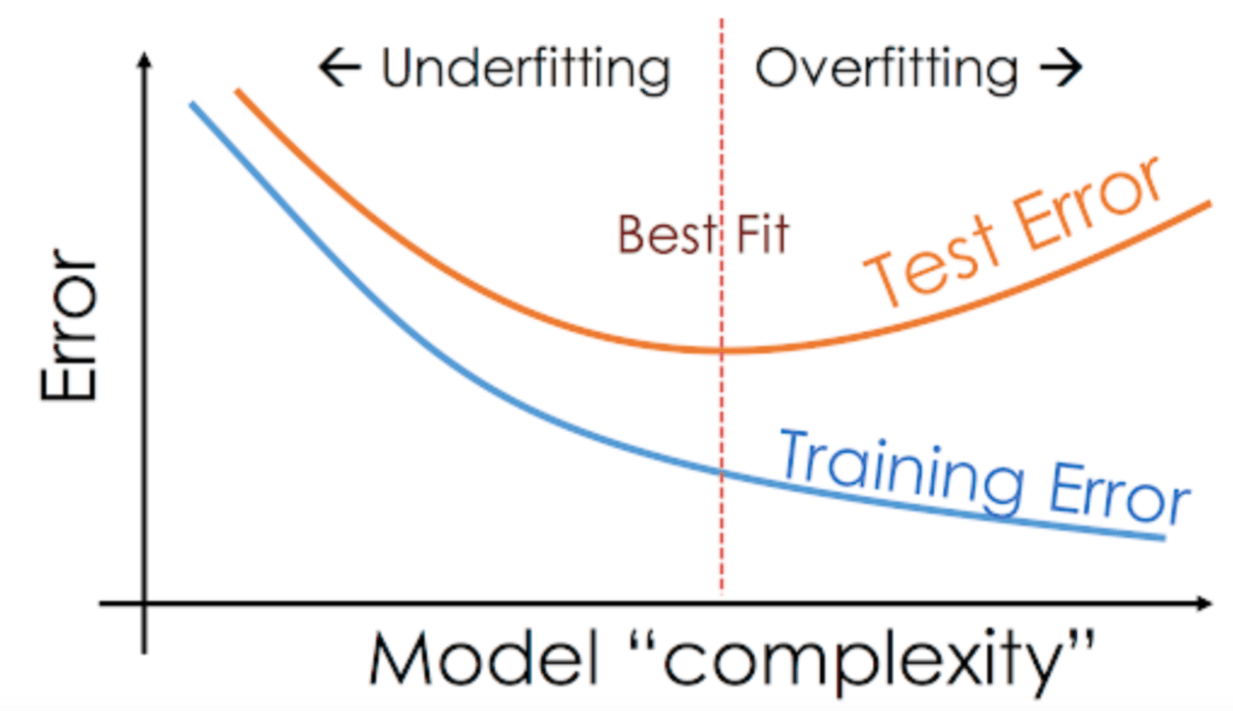

Errors that arise in machine learning approaches, both during the training of a new model (blue line) and the application of a built model (red line).
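
Training error and application (test) error can be tracked side by side on toy data. This sketch computes a learning curve: a fixed, fairly flexible degree-9 polynomial is fitted to training sets of growing size, with an assumed sin(2πx) target and sample sizes chosen only for illustration. With little data, the gap between the two errors is large (overfitting). As the training set grows, the gap narrows, which is one reason "not enough data" is a cause of overfitting.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(6)

def sample(n, noise=0.2):
    """Noisy draws from an assumed target function, sin(2*pi*x)."""
    x = rng.uniform(0.0, 1.0, n)
    return x, np.sin(2 * np.pi * x) + rng.normal(0.0, noise, n)

x_test, y_test = sample(2000)
curve = {}
for n_train in (15, 30, 100, 1000):
    x_tr, y_tr = sample(n_train)
    model = Polynomial.fit(x_tr, y_tr, deg=9)  # fixed, fairly flexible model
    train_mse = float(np.mean((y_tr - model(x_tr)) ** 2))
    test_mse = float(np.mean((y_test - model(x_test)) ** 2))
    curve[n_train] = (train_mse, test_mse)
    print(f"n={n_train:4d}: train {train_mse:.3f}  test {test_mse:.3f}  gap {test_mse - train_mse:.3f}")
```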

Overfitting is an issue within machine learning and statistics.

Overfitting is most often discussed in the context of supervised learning, although unsupervised models can overfit too. Overfitting occurs when a model begins to memorize the training data rather than learning to generalize from the underlying trend.
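
Memorizing versus learning the trend can be contrasted directly. The "memorizer" below is a 1-nearest-neighbour lookup, chosen here as an illustration of pure memorization rather than as a method named in the text. It returns the stored label of the closest training input, so its training error is exactly zero, but it repeats the training noise on new inputs. A straight-line fit that learns the assumed linear trend does worse on the training set and better on fresh data.

```python
import numpy as np

rng = np.random.default_rng(7)
x_train = rng.uniform(0.0, 10.0, 50)
y_train = 1.5 * x_train + rng.normal(0.0, 2.0, 50)  # assumed linear trend + noise
x_test = rng.uniform(0.0, 10.0, 1000)
y_test = 1.5 * x_test + rng.normal(0.0, 2.0, 1000)

def memorizer_predict(x_query):
    """'Memorize' the training set: return the stored y of the nearest training x."""
    nearest = np.abs(x_query[:, None] - x_train[None, :]).argmin(axis=1)
    return y_train[nearest]

slope, intercept = np.polyfit(x_train, y_train, 1)  # learn the trend instead


def trend_predict(x_query):
    """Predict from the fitted straight line."""
    return slope * x_query + intercept


def mse(y, y_hat):
    return float(np.mean((y - y_hat) ** 2))


memo = (mse(y_train, memorizer_predict(x_train)), mse(y_test, memorizer_predict(x_test)))
trend = (mse(y_train, trend_predict(x_train)), mse(y_test, trend_predict(x_test)))
print("memorizer: train %.2f  test %.2f" % memo)
print("trend:     train %.2f  test %.2f" % trend)
```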

Building on that idea, terms like overfitting and underfitting refer to deficiencies that the model's performance might suffer from. High variance gives rise to overfitting, while underfitting refers to a model that can neither model the training dataset nor generalize to new data.
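
Underfitting shows up as poor error on both the training data and new data, and increasing the model's flexibility is the usual fix. In this sketch the data is assumed to follow a quadratic curve with noise. A straight line cannot capture that shape, so both of its errors stay high, while a quadratic fit brings both down to roughly the noise level.

```python
import numpy as np

rng = np.random.default_rng(8)
x_train = rng.uniform(-3.0, 3.0, 100)
y_train = x_train ** 2 + rng.normal(0.0, 0.5, 100)  # assumed curved (quadratic) target
x_test = rng.uniform(-3.0, 3.0, 1000)
y_test = x_test ** 2 + rng.normal(0.0, 0.5, 1000)

results = {}
for degree in (1, 2):  # a straight line vs. a more flexible quadratic
    coefs = np.polyfit(x_train, y_train, degree)
    train_mse = float(np.mean((y_train - np.polyval(coefs, x_train)) ** 2))
    test_mse = float(np.mean((y_test - np.polyval(coefs, x_test)) ** 2))
    results[degree] = (train_mse, test_mse)
    print(f"degree {degree}: train MSE {train_mse:.2f}, test MSE {test_mse:.2f}")
```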