Data sources can include APIs (through the requests module) as well as JSON and CSV files. All of these capabilities and more combine to make Snakemake a powerful and flexible framework for creating data analysis pipelines.

Once the tables are created and the dependencies are installed, edit the launch script. The State Tool can be installed on Windows using PowerShell.

Follow the instructions in my Python data pipeline GitHub repository to run the code. Create a pandas array for interval data. Overall, Luigi provides a framework to develop and manage pipelines of batch jobs. A typical scikit-learn example creates a pipeline, fits it, and then uses it for prediction.

We'll cover static analysis as a step in continuous integration and continuous delivery (CI/CD), with an example in Python. Data sources can also include MongoDB (through the pymongo module). Let's dig into coding our pipeline and figure out how all these concepts are applied in code. We will also redefine these labels for consistency.

For information about citing data sets in publications, see the repository's citation policy. In scripts/launch_dataflow_runner.sh, set your project ID and region. The service runs Spark or Python code without requiring you to manage infrastructure, at a nominal cost.

"Building IoT Data Pipelines with Python" by Logan Wendholt YouTube Pipeline is instantiated by passing different components/steps of pipeline related to feature scaling, feature extraction and estimator for prediction. Api’s (request module) json and csv files; Run the wordcount example pipeline from the apache_beam package on the. Here is the python code example for creating sklearn pipeline, fitting the pipeline and using the pipeline for prediction. For example, ‘last update’.

In Luigi, each task should be wrapped in a class. Example steps include ingesting data from a file into BigQuery. In our case, it will be the deduplicated data frame from the last defined step. The goal is to get from raw logs to visitor counts per day.

In this post, you will discover the right tools and methods for building data pipelines in Python. Snakemake can display the job graph, which allows users to understand the structure of the workflow.

`X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)` splits the dataset into training data and test data. You may view all data sets through our searchable interface. The examples are solutions to common use cases we see in the field.
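What `train_test_split` does can be approximated in a few lines: shuffle the row indices reproducibly, then hold out a fraction for testing. The helper `split_train_test` below is a hypothetical stdlib stand-in, not scikit-learn's implementation, and it will not reproduce the library's exact split; it assumes a 25% test fraction, which is scikit-learn's default when neither `test_size` nor `train_size` is given.

```python
import math
import random
from typing import List, Sequence, Tuple


def split_train_test(X: Sequence, y: Sequence, test_fraction: float = 0.25,
                     random_state: int = 0) -> Tuple[List, List, List, List]:
    """Hypothetical helper: shuffle row indices with a seeded RNG, then hold some out."""
    if len(X) != len(y):
        raise ValueError("X and y must have the same number of rows")
    indices = list(range(len(X)))
    random.Random(random_state).shuffle(indices)  # same seed -> same split on every run
    n_test = math.ceil(len(X) * test_fraction)    # round the test set size up
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    X_train = [X[i] for i in train_idx]
    X_test = [X[i] for i in test_idx]
    y_train = [y[i] for i in train_idx]
    y_test = [y[i] for i in test_idx]
    return X_train, X_test, y_train, y_test


X = [[i, i * 2] for i in range(8)]
y = [i % 2 for i in range(8)]
X_train, X_test, y_train, y_test = split_train_test(X, y, random_state=0)
print(len(X_train), len(X_test))  # 6 2
```

Fixing `random_state` is what makes experiments repeatable: rerunning the script yields the same partition, so score differences come from the model rather than from the split.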

The outputs will be written to the BigQuery tables. I am currently using it for all of the data. In this lesson, you'll learn how to use generator expressions to build a data pipeline. StandardScaler() removes the mean and scales each feature to unit variance.
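With generator expressions, each stage pulls one item at a time from the stage before it, so the whole pipeline runs lazily. A minimal sketch, assuming a hypothetical input of comma-separated name/score lines (a list stands in for an open file); the stage names are illustrative.

```python
raw_lines = ["alice,3", "", "bob,5", "# comment", "carol,7"]  # stand-in for an open file

# Each stage is a generator expression over the previous one; nothing is
# computed until sum() starts pulling values through the chain.
stripped = (line.strip() for line in raw_lines)
records = (line for line in stripped if line and not line.startswith("#"))
fields = (line.split(",") for line in records)
scores = (int(score) for _name, score in fields)

total = sum(scores)
print(total)  # 15
```

Because no stage builds a list, replacing `raw_lines` with a file object of any size leaves memory use unchanged.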

Luigi automatically works out which tasks it needs to run to complete a requested job.

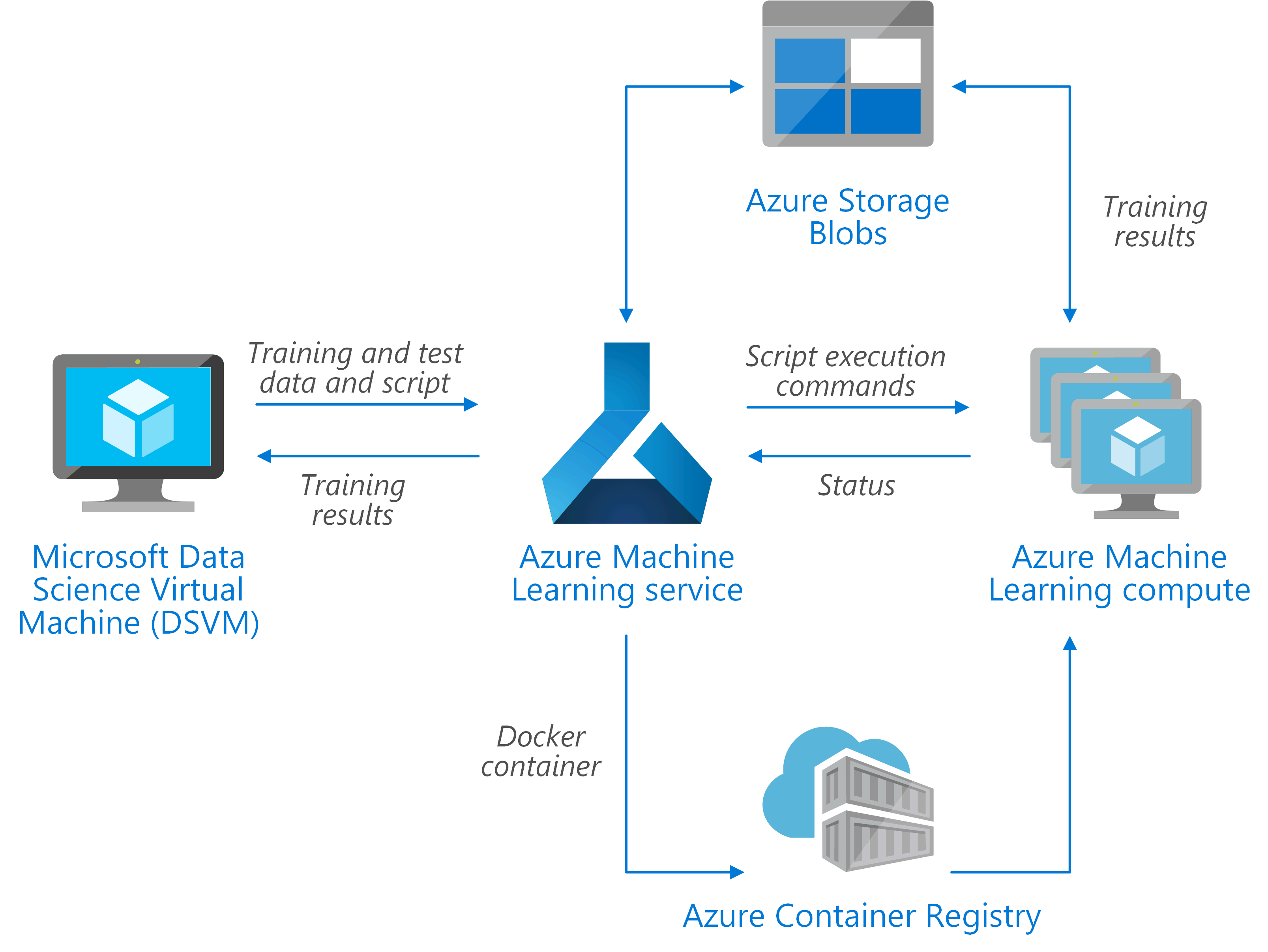

This method returns the last object pulled out from the stream. We go from raw log data to a dashboard where we can see visitor counts per day. Pipeline tasks depend on one another: for example, task B depends on the output of task A, and task D depends on the outputs of tasks B and C.

This repo contains several examples of the Dataflow Python API. Use a standardized naming scheme and formatting style, and be descriptive.
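Working out which tasks must run, and in what order, amounts to a topological sort of the dependency graph. Luigi does this through each task's `requires()` method; the sketch below is not Luigi's API but shows the same idea with the standard library's `graphlib` (Python 3.9+), using the example in which B depends on A and D depends on B and C. That C has no inputs of its own is an assumption, and `tasks_to_run` is a hypothetical helper.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Each task maps to the tasks whose output it needs (C is assumed to have no inputs).
DEPENDENCIES: Dict[str, Set[str]] = {
    "A": set(),
    "B": {"A"},
    "C": set(),
    "D": {"B", "C"},
}


def tasks_to_run(target: str, deps: Dict[str, Set[str]], completed: Set[str]) -> List[str]:
    """Return the incomplete tasks needed for `target`, in a valid execution order."""
    needed: Set[str] = set()
    stack = [target]
    while stack:
        task = stack.pop()
        if task in needed or task in completed:
            continue  # a finished task's upstream work does not need re-running
        needed.add(task)
        stack.extend(deps[task])
    # Keep only edges between tasks that actually have to run.
    graph = {task: deps[task] & needed for task in needed}
    return list(TopologicalSorter(graph).static_order())


print(tasks_to_run("D", DEPENDENCIES, completed=set()))
print(tasks_to_run("D", DEPENDENCIES, completed={"A", "B"}))  # ['C', 'D']
```

When nothing is complete, A and C may come out in either order, since neither depends on the other; the only guarantee is that every task appears after its inputs.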

Ingest data from files into BigQuery, reading the file structure from Datastore.

Second, write a second piece of code for the pipelines.

A simple example of a data pipeline is one that calculates how many visitors have visited the site each day. Individuals use this Python data pipeline framework to create a flexible and scalable database. This is a data analytics example with ETL in Python.
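A minimal sketch of that visitor-count pipeline in plain Python: parse each log line, deduplicate to one entry per (visitor, day), and count per day. The log format is a hypothetical, simplified Apache-style line (IP address first, then a bracketed timestamp), and treating the IP address as the visitor's identity is an assumption; real logs would need a sturdier parser.

```python
from collections import Counter
from datetime import datetime
from typing import Dict, Iterable

LOG_LINES = [
    '1.2.3.4 - - [09/Mar/2017:01:15:59 +0000] "GET /blog/ HTTP/1.1" 200',
    '1.2.3.4 - - [09/Mar/2017:01:16:10 +0000] "GET /about/ HTTP/1.1" 200',
    '5.6.7.8 - - [09/Mar/2017:02:00:00 +0000] "GET / HTTP/1.1" 200',
    '1.2.3.4 - - [10/Mar/2017:09:30:00 +0000] "GET / HTTP/1.1" 200',
]


def parse(line: str):
    """Extract (ip, date) from one hypothetical log line."""
    ip = line.split(" ", 1)[0]
    stamp = line[line.index("[") + 1 : line.index("]")]
    when = datetime.strptime(stamp, "%d/%b/%Y:%H:%M:%S %z")
    return ip, when.date()


def visitors_per_day(lines: Iterable[str]) -> Dict[str, int]:
    """Count distinct visitors per calendar day."""
    unique_visits = {parse(line) for line in lines}  # dedupe repeat hits on the same day
    counts = Counter(day.isoformat() for _ip, day in unique_visits)
    return dict(sorted(counts.items()))


print(visitors_per_day(LOG_LINES))  # {'2017-03-09': 2, '2017-03-10': 1}
```

The deduplication step matters: without it, the two requests from 1.2.3.4 on 9 March would be counted as two visitors.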

The Apache Beam SDK is an open source programming model for data pipelines. CI/CD pipelines automate as much of the development process as possible. This is a very basic ETL pipeline.

Download and install the Data Pipeline build, which contains a version of Python and all the tools listed in this post, so you can test them out for yourself.

As with the extract process, a simple ETL pipeline might have a single output (say, a relational database for a web application), but more complicated pipelines could have many.
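A very basic ETL pipeline with a single output can be sketched with the standard library alone: extract rows from CSV text, transform them, and load them into a relational database. An in-memory SQLite database stands in for the web application's database here, and the weather data, table name and column names are hypothetical.

```python
import csv
import io
import sqlite3

RAW_CSV = """city,temp_f
Austin,95
Boston,
Chicago,72
"""


def extract(text: str):
    """Extract: yield one dict per CSV row."""
    yield from csv.DictReader(io.StringIO(text))


def transform(rows):
    """Transform: drop rows with a missing value and convert Fahrenheit to Celsius."""
    for row in rows:
        if not row["temp_f"]:
            continue
        celsius = (float(row["temp_f"]) - 32) * 5 / 9
        yield row["city"], round(celsius, 1)


def load(records, conn: sqlite3.Connection) -> None:
    """Load: write the cleaned records into the single output table."""
    conn.execute("CREATE TABLE IF NOT EXISTS weather (city TEXT, temp_c REAL)")
    conn.executemany("INSERT INTO weather VALUES (?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT city, temp_c FROM weather ORDER BY city").fetchall())
# [('Austin', 35.0), ('Chicago', 22.2)]
```

Keeping extract, transform and load as separate functions means a pipeline with more outputs only needs additional load functions fed from the same transformed stream.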

A serverless data integration service makes it easy to discover, prepare, and combine data for analytics, machine learning, and application development. Data pipelines allow you to string together code to process large datasets or streams of data without maxing out your machine's memory.
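One common way to keep memory flat on a large stream is to process it in fixed-size batches. Python 3.12 ships `itertools.batched` for this (it yields tuples); the hand-written `batched` below is a sketch that yields lists and runs on Python 3.8+ (it uses the walrus operator). The readings are synthetic stand-ins for a real stream.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(items: Iterable[T], size: int) -> Iterator[List[T]]:
    """Yield consecutive lists of at most `size` items from any iterable."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(items)
    while batch := list(islice(iterator, size)):
        yield batch


# A million readings produced on demand; at most one batch of 1,000 exists in memory.
readings = (i % 10 for i in range(1_000_000))
batch_count = 0
total = 0
for batch in batched(readings, 1000):
    batch_count += 1
    total += sum(batch)
print(batch_count, total)  # 1000 4500000
```

Batching is a middle ground between one-item-at-a-time streaming and loading everything: it bounds memory while still letting each step (for example a bulk database insert) work on many rows at once.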

Dataflow no longer supports pipelines using Python 2.

For a general overview of the repository, please visit our About page.

![[O'Reilly] Data Pipelines with Python Free Download » FreeTuts Download](https://i2.wp.com/freetutsdownload.net/wp-content/uploads/2018/08/1481145411_2.jpg)

Remember that your readers include your future self, a.k.a. the poor sap who will be stuck trying to decipher your caffeine-addled hieroglyphics at 2 a.m. when a critical pipeline breaks.

You pay only for the time the job is running.

`X, y = make_classification(random_state=0)` generates a synthetic dataset for classification. The UC Irvine Machine Learning Repository maintains 585 data sets as a service to the machine learning community. For example, the label 'last update' is renamed 'last_update' for consistency. Check out the source code on GitHub.
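Redefining labels for consistency, so that 'last update' becomes 'last_update', is easy to automate. A sketch with hypothetical helpers `normalize_label` and `normalize_labels`; the exact rules (lower-casing, collapsing runs of non-alphanumeric characters into one underscore) are an assumed convention, not one taken from the source.

```python
import re
from typing import Dict, List


def normalize_label(label: str) -> str:
    """Lower-case a label and replace runs of non-alphanumerics with one underscore."""
    cleaned = re.sub(r"[^0-9a-zA-Z]+", "_", label.strip().lower())
    return cleaned.strip("_")


def normalize_labels(labels: List[str]) -> Dict[str, str]:
    """Map each original label to its normalized form, refusing silent collisions."""
    mapping = {label: normalize_label(label) for label in labels}
    if len(set(mapping.values())) != len(mapping):
        raise ValueError(f"labels collide after normalization: {mapping}")
    return mapping


print(normalize_labels(["last update", "Last-Update Time", "ID"]))
# {'last update': 'last_update', 'Last-Update Time': 'last_update_time', 'ID': 'id'}
```

The collision check guards against two distinct source columns (say 'last update' and 'last_update') quietly merging into one.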

For this example, you'll use a CSV file pulled from the TechCrunch Continental USA dataset.

Python reading file, writing and appending to file YouTube For example, if your pipeline is processing large volumes of compressed video and tabular metadata about those videos, you might have two output streams: Install the state tool on windows using powershell: X_train, x_test, y_train, y_test = train_test_split (x, y,random_state=0) is used to split the dataset into train data and test data. A serverless data integration service makes it easy.
