Principal component analysis (PCA) is an unsupervised machine learning technique. It can be used in finance to analyze stock data and forecast returns.

PCA Machine Learning Explained

PCA can be performed in 6 steps. Principal component analysis offers a well-established path to dimension reduction.

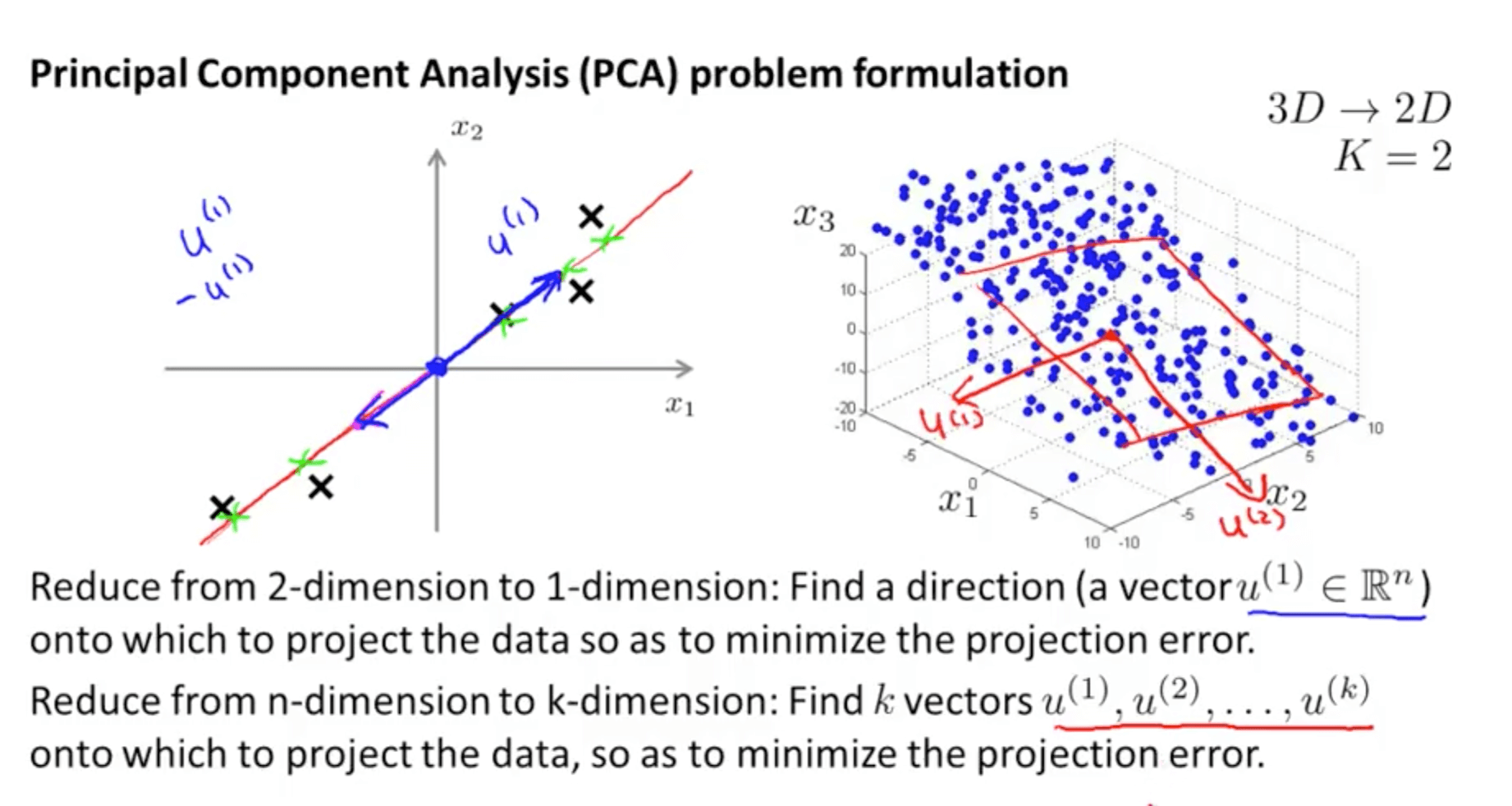

Applying PCA with 2 principal components. If the input dimensionality is too high, using PCA to speed up the algorithm is a reasonable choice. Let’s assume you have a dataset X which is a matrix of size n × m, so you have n samples of m dimensions. An applied introduction to using principal component analysis in machine learning.
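A minimal sketch of that setup, assuming NumPy and synthetic data (the helper name `pca_project` and the random matrix are illustrative, not from any of the quoted articles). It centers an n × m matrix, takes the eigenvectors of its covariance matrix, and projects onto the top 2 components:

```python
import numpy as np

def pca_project(X, n_components=2):
    """Project X (n samples x m features) onto its top principal components."""
    X_centered = X - X.mean(axis=0)           # subtract the mean of each variable
    cov = np.cov(X_centered, rowvar=False)    # m x m covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)    # eigh returns eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]         # reorder from largest to smallest variance
    components = eigvecs[:, order[:n_components]]
    return X_centered @ components

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5))   # hypothetical dataset: n = 100 samples, m = 5 dimensions
Z = pca_project(X, n_components=2)
print(Z.shape)  # (100, 2)
```

In practice a library routine such as `sklearn.decomposition.PCA` would usually replace the hand-written helper; the sketch only makes the individual steps visible.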

Machine Learning cơ bản

In image datasets, each pixel is considered a feature. It was mentioned that PCA, which is a very useful method despite the loss of information, reduces the dimensionality of the data and the number of features. Principal component analysis (PCA) is one of the most basic and useful methods for this purpose. An applied introduction to using principal component analysis in machine learning.

An overview of Principal Component Analysis

The data can be loaded with `from sklearn.datasets import load_breast_cancer` followed by `breast_cancer = load_breast_cancer()`. As others have mentioned, PCA stands for principal component analysis. PCA is a widely used technique for reducing the dimensionality of large data sets. PCA can help compress an image. The covariance matrix is a symmetric matrix with rows and columns equal to the number of dimensions in the data.

PCA Machine Learning algorithm YouTube

As others have mentioned, PCA stands for principal component analysis. PCA is not a feature selection algorithm. In the following graph, you can see that the first principal component (PC) accounts for 70% of the variance, the second PC accounts for 20%, and so on. Principal component analysis (PCA) is one of the most basic and useful methods.


A Guide to Principal Component Analysis (PCA) for Machine Learning

The covariance matrix is a symmetric matrix with rows and columns equal to the number of dimensions in the data. Principal component analysis, or PCA, is a dimensionality reduction method that shrinks large datasets by transforming a large set of variables into a smaller one while preserving most of the information. Besides using PCA as a data preparation technique, we can also use it to help visualize data.
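The covariance matrix property can be checked directly. This is a small sketch on synthetic data (the 50 × 4 random matrix is an assumption for illustration): for data with 4 dimensions, the covariance matrix should be 4 × 4 and equal to its own transpose.

```python
import numpy as np

rng = np.random.default_rng(1)
X = rng.normal(size=(50, 4))      # hypothetical data: 50 samples, 4 dimensions
cov = np.cov(X, rowvar=False)     # rowvar=False treats columns as variables

print(cov.shape)                  # (4, 4)
print(np.allclose(cov, cov.T))    # True
```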

machine learning target in cluster analisys (PCA) Data Science

PCA is one of the most widely used tools in exploratory data analysis and in machine learning for predictive models. Principal component analysis (PCA) is one of the most basic and useful methods for this purpose. Introduction to principal component analysis (PCA): PCA is one of those statistical algorithms that is popular among data scientists.

PCA Training vs Testing Solution Intro to Machine Learning YouTube

Machine learning algorithms often converge faster when trained on principal components instead of the original dataset. One step of PCA is to subtract the mean of each variable. PCA is based on linear algebra, which computers can solve efficiently. In image datasets, each pixel is considered a feature. Principal component analysis, or PCA, is a dimensionality reduction method that is used to reduce the dimensionality of large datasets.

Dimensionality Reduction With Kernel PCA

Let’s assume you have a dataset X which is a matrix of size n × m, so you have n samples of m dimensions. PCA is one of the most widely used dimension reduction techniques: it transforms a larger dataset into a smaller one by identifying correlations and patterns while preserving most of the valuable information. It was mentioned that PCA, which is a very useful method despite the loss of information, reduces the dimensionality of the data and the number of features.

PCA and SVM for Machine Learning YouTube

Principal component analysis (PCA) is a popular unsupervised dimensionality reduction technique used to reduce the number of input variables in the training dataset. It is used to reduce the number of dimensions in healthcare data. For example, by reducing the dimensionality of the data, PCA enables us to better generalize machine learning models.

Machine Learning Koios

Let’s assume you have a dataset X which is a matrix of size n × m, so you have n samples of m dimensions. PCA linearly transforms the data into a space that highlights the importance of each new feature, thus allowing us to prune the ones that don’t reveal much. PCA helps in identifying relationships among different variables.

Understanding and Applying PCA (Principal Component Analysis), Zhihu

PCA is an unsupervised approach to performing a linear transformation on your data. Principal component analysis (PCA) is one of the most basic and useful methods for this purpose. The benefits of PCA: PCA is an unsupervised learning technique that offers a number of benefits. PCA involves the transformation of variables in the dataset into a new set of variables which are called PCs (principal components).

Christmas Carol and other eigenvectors

For example, by reducing the dimensionality of the data, PCA enables us to better generalize machine learning models. It is used to reduce the number of dimensions in healthcare data. For this article, I am going to demonstrate PCA using the classic breast cancer dataset available from sklearn. PCA is one of the most widely used tools in exploratory data analysis and in machine learning for predictive models.
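A sketch of what such a demonstration might look like, assuming scikit-learn is installed. The standardization step and the choice of 2 components are assumptions here, not something the quoted article confirms:

```python
from sklearn.datasets import load_breast_cancer
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

breast_cancer = load_breast_cancer()
X = breast_cancer.data                         # 569 samples, 30 features

# Assumption: standardize first so that no feature dominates purely by its scale.
X_scaled = StandardScaler().fit_transform(X)

pca = PCA(n_components=2)                      # keep 2 components, e.g. for plotting
X_2d = pca.fit_transform(X_scaled)

print(X.shape, X_2d.shape)                     # (569, 30) (569, 2)
print(pca.explained_variance_ratio_)           # share of variance kept per component
```

The two columns of `X_2d` can then be plotted against each other and colored by `breast_cancer.target` to visualize the data.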

Principal Component Analysis in Machine Learning EXPLAINED!!! ( PCA

In other words, there are 128 × 128 × 3 = 49,152 features for a 128x128 RGB (3 channel) image. In machine learning, PCA is an unsupervised algorithm that performs a linear transformation on your data. The covariance matrix is a symmetric matrix with rows and columns equal to the number of dimensions in the data. It can be used in finance to analyze stock data and forecast returns.
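The feature count can be verified by flattening an image array. The all-zero array below is only a placeholder standing in for a real 128x128 RGB image:

```python
import numpy as np

image = np.zeros((128, 128, 3), dtype=np.uint8)  # placeholder 128x128 RGB image
features = image.reshape(-1)                     # one feature per pixel per channel

print(features.shape)  # (49152,)
```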

Machine Learning cơ bản

PCA can be used in finance to analyze stock data and forecast returns. It was mentioned that PCA, which is a very useful method despite the loss of information, reduces the dimensionality of the data and the number of features. The benefits of PCA: PCA is an unsupervised learning technique that offers a number of benefits. Perhaps the most popular use of principal component analysis is dimensionality reduction.

Principal Component Analysis (PCA) with Scikitlearn by Rukshan

PCA is a very common way to speed up your machine learning algorithm by getting rid of correlated variables that contribute little to decision making. PCA linearly transforms the data into a space that highlights the importance of each new feature, thus allowing us to prune the ones that don’t reveal much. The covariance matrix is a symmetric matrix with rows and columns equal to the number of dimensions in the data.

Machine Learning cơ bản

Applications of PCA in machine learning: PCA is used to visualize multidimensional data. Perhaps the most popular use of principal component analysis is dimensionality reduction. It is one of the most widely used dimension reduction techniques: it transforms a larger dataset into a smaller one by identifying correlations and patterns while preserving most of the valuable information.

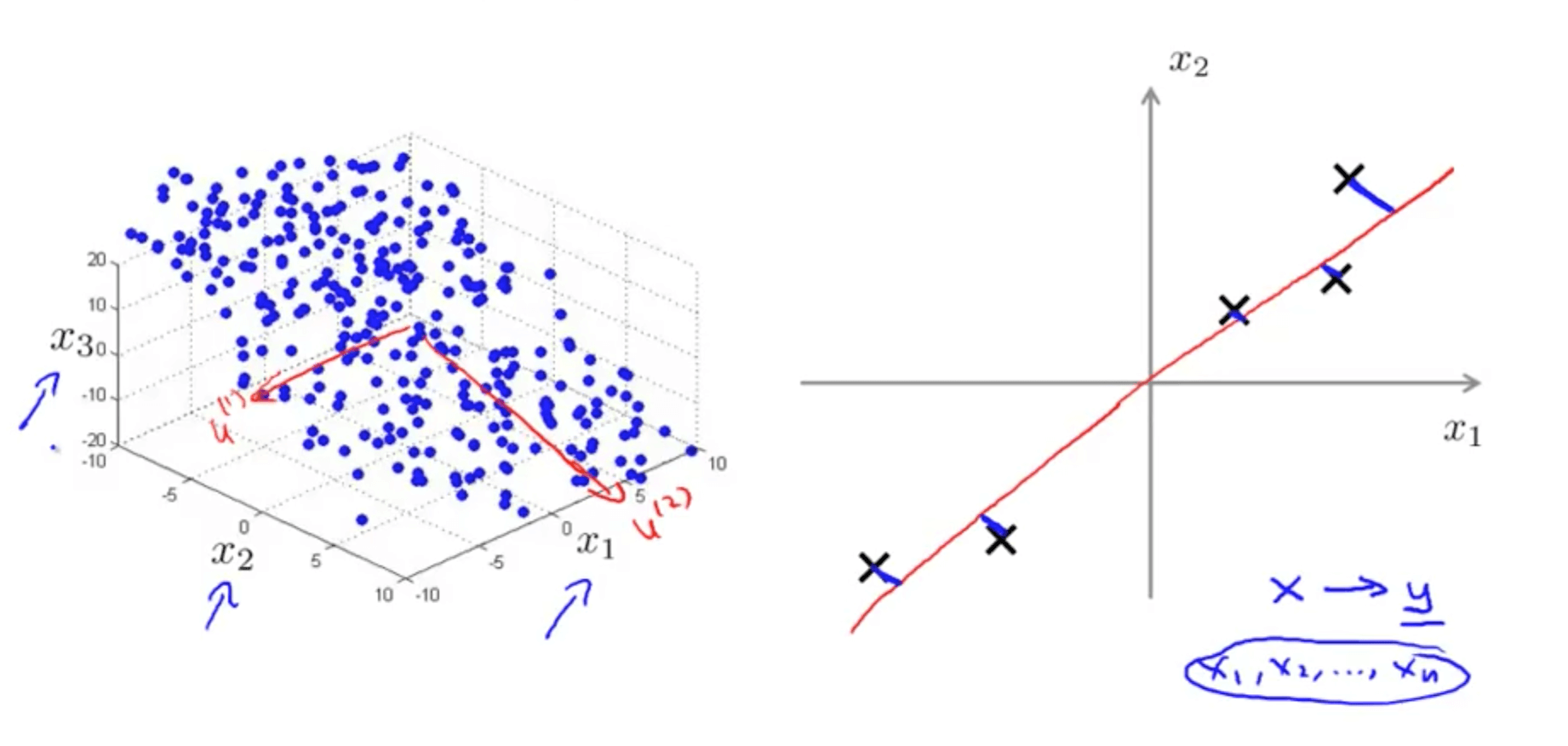

PCA and Dimensionality Reduction

Typically, the main purpose of PCA is dimension reduction. In other words, there are 128 × 128 × 3 = 49,152 features for a 128x128 RGB (3 channel) image. Step by step explanation of PCA using Python with example. Applications of PCA in machine learning: PCA is used to visualize multidimensional data. I’ll try to give you an intuitive explanation.

Machine Learning 29 PCA கணியம்

Applications of PCA in machine learning: PCA is used to visualize multidimensional data. For example, by reducing the dimensionality of the data, PCA enables us to better generalize machine learning models. Principal component analysis offers a well-established path to dimension reduction. In machine learning, PCA is an unsupervised algorithm. What is principal component analysis (PCA)?

Dimensionality Reduction Machine Learning, Deep Learning, and

What is principal component analysis (PCA)? PCA is a statistical procedure that uses an orthogonal transformation to convert a set of correlated variables into a set of uncorrelated variables. PCA linearly transforms the data into a space that highlights the importance of each new feature, thus allowing us to prune the ones that don’t reveal much.
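The "correlated to uncorrelated" claim can be illustrated with a small NumPy sketch. The two synthetic variables are assumptions made up for this example: the second is built from the first, so they start out strongly correlated.

```python
import numpy as np

rng = np.random.default_rng(2)
a = rng.normal(size=500)
b = 2 * a + rng.normal(scale=0.5, size=500)   # b depends on a, so the two correlate
X = np.column_stack([a, b])

X_centered = X - X.mean(axis=0)
eigvals, eigvecs = np.linalg.eigh(np.cov(X_centered, rowvar=False))
Z = X_centered @ eigvecs                      # rotate the data onto the principal axes

# The transformation is orthogonal: eigvecs^T eigvecs is the identity.
print(np.allclose(eigvecs.T @ eigvecs, np.eye(2)))

# After the rotation the covariance matrix is diagonal,
# meaning the new variables are uncorrelated.
cov_Z = np.cov(Z, rowvar=False)
print(np.round(cov_Z, 6))
```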

PCA Application in Machine Learning Apprentice Journal Medium

The data can be loaded with `from sklearn.datasets import load_breast_cancer` followed by `breast_cancer = load_breast_cancer()`. The training time of the algorithms drops significantly with fewer features. Reducing the number of components or features costs some accuracy; on the other hand, it makes a large data set simpler and easier to explore and visualize. PCA is based on linear algebra, which computers can solve efficiently.
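One way to see the trade-off between fewer components and lost information is to reconstruct the data from a reduced representation and measure the error. This is a sketch on synthetic data, assuming scikit-learn; the component counts 1, 3 and 6 are arbitrary choices for illustration.

```python
import numpy as np
from sklearn.decomposition import PCA

rng = np.random.default_rng(4)
X = rng.normal(size=(200, 6))   # hypothetical data with 6 features

errors = []
for k in (1, 3, 6):
    pca = PCA(n_components=k).fit(X)
    # Compress to k components, then map back to the original 6-dimensional space.
    X_rec = pca.inverse_transform(pca.transform(X))
    errors.append(np.mean((X - X_rec) ** 2))

print(errors)   # error shrinks as k grows and is near zero when k equals the feature count
```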

Understanding Principal Component Analysis LaptrinhX

We are using the `PCA` class of the `sklearn.decomposition` module. The training time of the algorithms drops significantly with fewer features. The variance explained declines with each successive component. PCA involves the transformation of variables in the dataset into a new set of variables which are called PCs (principal components). In other words, there are 128 × 128 × 3 = 49,152 features for a 128x128 RGB (3 channel) image.
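The declining explained variance can be read from the `explained_variance_ratio_` attribute of a fitted `PCA` object. The sketch below assumes scikit-learn and uses synthetic data whose columns are deliberately given different scales:

```python
import numpy as np
from sklearn.decomposition import PCA

rng = np.random.default_rng(3)
# Hypothetical data: four columns with decreasing spread.
X = rng.normal(size=(200, 4)) * np.array([5.0, 2.0, 1.0, 0.5])

pca = PCA(n_components=4).fit(X)
ratios = pca.explained_variance_ratio_

print(ratios)             # sorted from largest to smallest
print(np.cumsum(ratios))  # cumulative share; reaches 1.0 when all components are kept
```

A common use of the cumulative sum is to pick the smallest number of components that reaches a chosen threshold of retained variance.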

Dimensionality Reduction Machine Learning, Deep Learning, and

The variance explained declines with each successive component. In other words, there are 128 × 128 × 3 = 49,152 features for a 128x128 RGB (3 channel) image. PCA is a widely used technique for reducing the dimensionality of large data sets. A picture is worth a thousand words. PCA can help compress an image.

Free Online Course Mathematics for Machine Learning PCA from Coursera

A picture is worth a thousand words. What is principal component analysis (PCA)? Let’s assume you have a dataset X which is a matrix of size n × m, so you have n samples of m dimensions. Principal component analysis offers a well-established path to dimension reduction.

PCA clearly explained: How, when, why to use it and feature importance

It is used to reduce the number of dimensions in healthcare data. PCA is a very common way to speed up your machine learning algorithm by getting rid of correlated variables that contribute little to decision making. The goal of PCA is to identify patterns in a data set.

Machine Learning cơ bản

A picture is worth a thousand words. PCA can be performed in 6 steps. Principal component analysis offers a well-established path to dimension reduction. PCA is an unsupervised approach to performing a linear transformation on your data.

Pca Machine Learning Wiki MOCHINV

PCA is a widely used technique for reducing the dimensionality of large data sets. Besides using PCA as a data preparation technique, we can also use it to help visualize data. Perhaps the most popular use of principal component analysis is dimensionality reduction. PCA linearly transforms the data into a space that highlights the importance of each new feature, thus allowing us to prune the ones that don’t reveal much.

PCA is a very common way to speed up your machine learning algorithm by getting rid of correlated variables that contribute little to decision making.

The covariance matrix is a symmetric matrix with rows and columns equal to the number of dimensions in the data. Moreover, PCA is an unsupervised statistical technique. For example, by reducing the dimensionality of the data, PCA enables us to better generalize machine learning models. Principal component analysis offers a well-established path to dimension reduction. PCA can be performed in 6 steps. An applied introduction to using principal component analysis in machine learning.

Principal component analysis (PCA) is an unsupervised machine learning technique. The variance explained declines with each successive component. PCA involves the transformation of variables in the dataset into a new set of variables which are called PCs (principal components). The training time of the algorithms drops significantly with fewer features.