PCA is a linear algorithm. Its input data could be held in a data structure, such as a class in Java or C++, or a struct in C.

PCA Algorithm Example

The data is centered by subtracting the mean of each column from the values in that column. While much of the data comes from a healthy engine, the sensors have also captured data from the engine when it needs maintenance.
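The centering step can be sketched in a few lines of NumPy. The toy matrix `X` below is a hypothetical example and is not taken from any dataset mentioned on this page.

```python
import numpy as np

# Hypothetical toy data: 4 samples (rows) x 2 features (columns).
X = np.array([[2.0, 10.0],
              [4.0, 20.0],
              [6.0, 30.0],
              [8.0, 40.0]])

column_means = X.mean(axis=0)   # one mean per column
X_centered = X - column_means   # broadcasting subtracts each column's own mean

print(column_means)
print(X_centered.mean(axis=0))  # every column mean is now (numerically) zero
```

After this step each column has mean zero, which is what the covariance and SVD computations used in PCA assume.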

We then apply the SVD. In scikit-learn, import the class with `from sklearn.decomposition import PCA`, make an instance of the model with `pca = PCA(.95)`, and fit PCA on the training set. What will be the value or.
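As a minimal sketch of the scikit-learn usage mentioned above, the snippet below fits `PCA(0.95)` on synthetic data. The data, the array names and the noise level are assumptions made for illustration; only `PCA`, `fit_transform`, `n_components_` and `explained_variance_ratio_` come from scikit-learn itself.

```python
import numpy as np
from sklearn.decomposition import PCA

rng = np.random.default_rng(0)

# Hypothetical training set: 200 samples, 10 features that are driven by
# 3 latent factors plus a little noise, so few components should suffice.
latent = rng.normal(size=(200, 3))
mixing = rng.normal(size=(3, 10))
X_train = latent @ mixing + 0.01 * rng.normal(size=(200, 10))

# A float between 0 and 1 asks for enough components to retain
# at least that fraction of the variance.
pca = PCA(0.95)
X_reduced = pca.fit_transform(X_train)

print(pca.n_components_)                      # number of components kept
print(np.sum(pca.explained_variance_ratio_))  # fraction of variance retained
```

When a fraction is passed instead of an integer, the number of components is chosen during fitting and can be read back from `pca.n_components_`.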

*(PDF) 2D-3D face recognition method based on a modified CCA-PCA algorithm*

Examples for PCA: this example shows how to identify principal components in the customer_churn data set, store them in the PCA model, and apply this model to additional data. If we rank the eigenvalues in descending order, we get λ1 > λ2, which means that the eigenvector that corresponds to the first principal component (PC1) is v1 and the one that corresponds to the second (PC2) is v2.

*Performance improvement for face recognition using PCA and two*

Principal component analysis (PCA) is a statistical procedure that uses an orthogonal transformation to convert a set of correlated variables into a set of uncorrelated variables. Calculate the covariance matrix X of the data points. Calculate the eigenvectors and corresponding eigenvalues. Transform the original n-dimensional data points into k dimensions.
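The steps above (covariance matrix, eigenvectors and eigenvalues, projection to k dimensions) can be sketched with NumPy as follows. This is an illustrative implementation under stated assumptions, not the code of any tool referenced on this page; the function name `pca_eig` and the random test data are hypothetical.

```python
import numpy as np

def pca_eig(X, k):
    """Project X (n_samples x n_features) onto its top-k principal components."""
    X_centered = X - X.mean(axis=0)          # subtract each column's mean
    cov = np.cov(X_centered, rowvar=False)   # covariance matrix of the features
    eigvals, eigvecs = np.linalg.eigh(cov)   # eigh, because cov is symmetric
    order = np.argsort(eigvals)[::-1]        # sort eigenvalues in decreasing order
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    components = eigvecs[:, :k]              # first k eigenvectors as columns
    return X_centered @ components, eigvals

rng = np.random.default_rng(0)
# Hypothetical data: 4 features with clearly different spreads.
X = rng.normal(size=(100, 4)) @ np.diag([5.0, 2.0, 1.0, 0.1])

Z, eigvals = pca_eig(X, k=2)
print(Z.shape)   # (100, 2)
print(eigvals)   # variances along each principal direction, largest first
```

The projected columns are uncorrelated, and their variances equal the two largest eigenvalues, which is the property the definition above describes.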

*PCA Algorithm to Obtain Feature Vectors*

Basis vectors are the eigenvectors of Σ; a larger eigenvalue means a more important eigenvector. How principal component analysis (PCA) works. The Kaggle campus recruitment dataset is used. For our example (slope of line = 0.25), we can calculate as follows:

*The block diagram of WBDPCA filter*

The first step is to normalize the data so that PCA works properly.

*(PDF) Face Recognition and Identification based On PCA algorithm and QR*

This produces a dataset whose mean is zero. Sum of the eigenvalues λ1 and λ2 = 1.28403 + 0.0490834 ≈ 1.33 = total variance (the majority of the variance comes from λ1).
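The share of the total variance carried by each eigenvalue follows directly from the two numbers quoted above. A short check in plain Python, where the variable names are chosen only for illustration:

```python
# Eigenvalues quoted in the text, largest first.
eigenvalues = [1.28403, 0.0490834]

total_variance = sum(eigenvalues)
ratios = [ev / total_variance for ev in eigenvalues]

print(total_variance)  # about 1.33
print(ratios)          # the first entry is about 0.963
```

Roughly 96% of the variance lies along the first principal component, which is why keeping only that component loses little information in this example.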

*Cluster representation of the reduced features from the PCA algorithm*

PCA uses matrix operations from statistics and algebra to find the dimensions that contribute the most to the variance of the data. The goal is to predict the salary.

*Flow chart of the algorithm used for analysis, PCA Principal Component*

Since PCA's main idea is dimensionality reduction, you can leverage it to speed up your machine learning algorithm's training and testing time when your data has a lot of features. The singular values are 25, 6.0, 3.4, 1.9.
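The text does not say which matrix the singular values 25, 6.0, 3.4 and 1.9 belong to. Assuming they come from the SVD of a mean-centered data matrix, the variance along each component is proportional to the squared singular value, so the explained fractions can be sketched as:

```python
import numpy as np

# Singular values quoted in the text (assumed to come from centered data).
singular_values = np.array([25.0, 6.0, 3.4, 1.9])

variances = singular_values ** 2             # proportional to component variances
explained_ratio = variances / variances.sum()
cumulative = np.cumsum(explained_ratio)

print(explained_ratio)  # the first component dominates
print(cumulative)       # running total of variance explained
```

Under that assumption the first component alone accounts for a little over 92% of the variance.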

*Flowchart of the alternating-directions algorithm for solving Robust*

Here are the steps followed for performing PCA: You have a dataset that includes measurements for different sensors on an engine (temperatures, pressures, emissions, and so on). Scaling the best fit line down to 1 unit this 1 unit length best fit line is. Algorithms to calculate (build) PCA models.

*Acquisition process of 3PCA algorithm*

These algorithms are a reflection of how PCA has been used in different disciplines. PCA is the most widely used tool in exploratory data analysis and in machine learning for predictive models. Moreover, PCA is an unsupervised statistical technique. How PCA can avoid overfitting in a classifier due to a high-dimensional dataset.

*Annual mean OMI SO2 VCDs from PCA algorithm (column 1), mean OMI SO2*

With these 32 components we are able to express 98.7% of the variance, hence reducing the training time.

*An example of Robust PCA algorithm on the Extended Yale B Face*

The PCA (principal component analysis) algorithm takes sample images as input and calculates the face vector for each input image. For example, a 28 x 28.

*Is PCA a deterministic algorithm? (Quora)*

Anyone who has tried to build machine learning models with many features will already have a glimpse of the concept of principal component analysis. We can draw a line from (0,0) to (4,3), which represents the direction of the vector.
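To make the direction from (0,0) to (4,3) concrete, the vector can be scaled to unit length, which is the usual convention for principal directions. A small sketch in plain Python, with variable names chosen for illustration:

```python
import math

# Vector from (0, 0) to (4, 3).
vx, vy = 4.0, 3.0

length = math.hypot(vx, vy)          # Euclidean length of the vector
unit = (vx / length, vy / length)    # same direction, length 1

print(length)  # 5.0
print(unit)    # (0.8, 0.6)
```

The unit vector keeps the direction of the original line while removing its scale, so different directions can be compared on equal terms.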

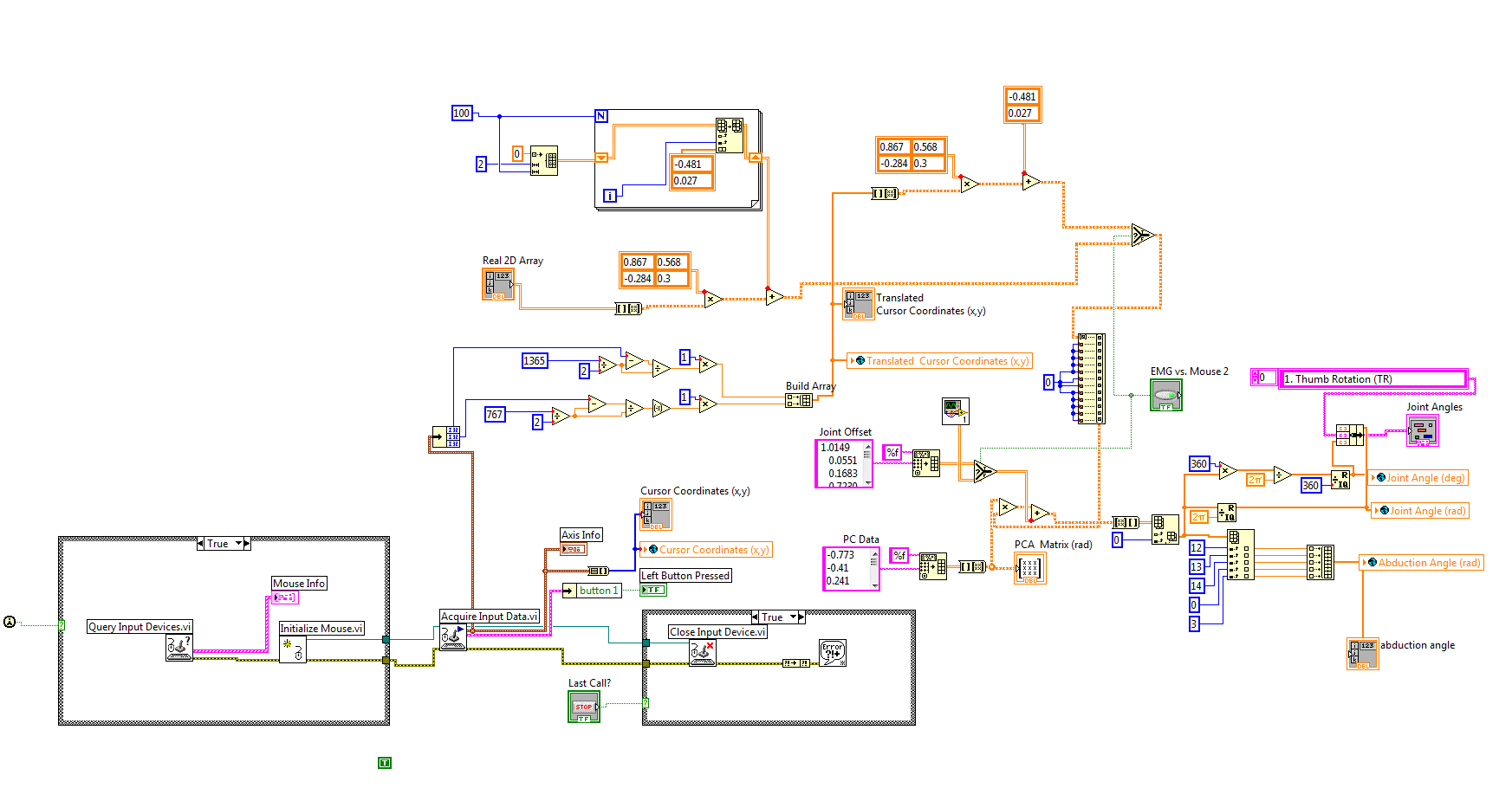

*Design of a Myoelectric Prosthetic Hand Controller Based on Principal*

In this case, 95% of the variance amounts to 330 principal components.

*(a) The PCA function in Algorithm 1 for a particular layer showing*

You can find out how many components PCA chose after fitting the model using `pca.n_components_`. To print PCA models, use the print_model stored procedure. Principal component analysis (PCA) is a machine learning algorithm.

![Human Face Recognition based on Improved PCA Algorithm](https://i2.wp.com/d3i71xaburhd42.cloudfront.net/ada4901e0022b4fdeb9ec3ae26b986199f7ae3be/4-Figure2-1.png)

*[PDF] Human Face Recognition based on Improved PCA Algorithm*

Sort the eigenvectors according to their eigenvalues in decreasing order.

*GP-based PCA algorithm parameters*

Choose the first k eigenvectors; these will be the new k dimensions. The different algorithms used to build a PCA model provide different insights into the model’s structure and how to interpret it. PCA is called by different names in each area.

*Face recognition using PCA algorithm in MATLAB (YouTube)*

The PCA algorithm is basically a sequence of operations (no loops or optimizations) using the functions listed in the previous section. The algorithm shown takes as input the modeling data in some representation.

*(a) Block flow diagram of PCA methodology. (b) Algorithm chart of PCA data*

The PCA algorithm is implemented in the pca and project_pca stored procedures.

*PCA algorithm using MATLAB: classification using PCA in MATLAB (YouTube)*

In this post, I want to give an.

*PCA algorithm using MATLAB 2: classification using PCA in MATLAB (YouTube)*

Now divide the elements of the x matrix by the number 1.3602 (just found above). This gives the eigenvector for the eigenvalue λ2; they are 0.735176.

*How the edge PCA algorithm works. (a) For every edge of the tree, the*

The inclusion of more features.

*PCA using the NIPALS algorithm (Dray and Dufour 2007). Top left Trait*

Performing principal component analysis (PCA): we first find the mean vector xm and the variation of the data (which corresponds to the variance), then subtract the mean from the data values. Here is the screenshot of the data used.

![Human Face Recognition based on Improved PCA Algorithm](https://i2.wp.com/d3i71xaburhd42.cloudfront.net/ada4901e0022b4fdeb9ec3ae26b986199f7ae3be/4-Figure1-1.png)

*[PDF] Human Face Recognition based on Improved PCA Algorithm*

How PCA can improve the speed of the training process.

*Flowchart of the PCA-based inversion algorithm*

Inverse transforming the PCA output and reshaping for visualization using imshow:
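The inverse transform can be sketched with NumPy alone. The 64 x 64 array below is a hypothetical stand-in for a grayscale image, and `pca_reconstruct` is an illustrative helper, not a function from any library mentioned here.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical "image": 64 x 64 pixels, close to rank 5 plus slight noise.
img = rng.normal(size=(64, 5)) @ rng.normal(size=(5, 64))
img = img + 0.01 * rng.normal(size=(64, 64))

def pca_reconstruct(data, k):
    """Project rows of data onto the top-k components, then map them back."""
    mean = data.mean(axis=0)
    centered = data - mean
    # Rows of vt are the principal directions, strongest first.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:k]
    scores = centered @ components.T      # forward transform (k columns)
    return scores @ components + mean     # inverse transform, original shape

approx = pca_reconstruct(img, k=5)
rel_error = np.linalg.norm(img - approx) / np.linalg.norm(img)
print(approx.shape, rel_error)
```

The reconstruction has the same shape as the input, so with matplotlib available it could be displayed with `plt.imshow(approx, cmap="gray")`.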

*Framework of the fast independent component analysis (ICA) algorithm*

The sample covariance matrix is $\Sigma = \frac{1}{m} \sum_{i=1}^{m} (x_i - \bar{x})(x_i - \bar{x})^T$.


Given data $\{x_1, \dots, x_m\}$, compute the sample covariance matrix.

Fit PCA with 32 components using `pca = PCA(32).fit(img_r)`, project the image with `img_transformed = pca.transform(img_r)`, then print the shape of the result and the total explained variance with `print(img_transformed.shape)` and `print(np.sum(pca.explained_variance_ratio_))`.