KNN (k-nearest neighbors) is a nonlinear learning algorithm. In the image, the model is depicted as a line drawn between the points.

The `Arduino_KNN` library provides a simple interface for using KNN in your own Arduino sketches.

An introductory text covering a couple of commonly used machine learning techniques shows how they are performed in R. In Python, a KNN classifier with k = 30 can be trained and applied with scikit-learn: `k = 30`, `knn = KNeighborsClassifier(n_neighbors=k)`, `knn.fit(X_train, y_train)`, and `preds = knn.predict(X_test)`. To evaluate the KNN model, read the following post to learn more about evaluating a machine learning model. One use case is predicting whether the stock market will go up or down.
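
To make the `fit`/`predict` workflow less of a black box, here is a minimal, standard-library-only sketch of what a KNN classifier does in those two steps. The class name `SimpleKNN`, the toy data, and the use of k = 3 (the toy set has only six points, so k = 30 would not fit) are illustrative assumptions; this is not scikit-learn's implementation.

```python
import math
from collections import Counter


class SimpleKNN:
    """Hypothetical minimal KNN classifier mimicking the fit/predict workflow."""

    def __init__(self, n_neighbors=5):
        self.n_neighbors = n_neighbors

    def fit(self, X, y):
        # KNN is "lazy": training just stores all available cases.
        self.X = [tuple(x) for x in X]
        self.y = list(y)
        return self

    def _predict_one(self, x):
        # Rank stored cases by Euclidean distance to x.
        ranked = sorted(range(len(self.X)), key=lambda i: math.dist(self.X[i], x))
        nearest = [self.y[i] for i in ranked[: self.n_neighbors]]
        # Majority vote among the k nearest labels.
        return Counter(nearest).most_common(1)[0][0]

    def predict(self, X):
        return [self._predict_one(x) for x in X]


X_train = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
y_train = ["a", "a", "a", "b", "b", "b"]
X_test = [(1.2, 1.4), (8.7, 8.3)]

k = 3
knn = SimpleKNN(n_neighbors=k)
knn.fit(X_train, y_train)
preds = knn.predict(X_test)
print(preds)  # ['a', 'b']
```

Note that `fit` does no real work; all of the cost is paid at prediction time, when every stored case is compared with the query.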

A fantastic application of KNN is in collaborative filtering algorithms for recommender systems. KNN also handles regression: if the five closest neighbours had values of [100, 105, 95, 100, 110], the algorithm would return their average, 102.
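
The regression case can be sketched in a few lines. The training data below is hypothetical and one-dimensional, chosen only so that the five points nearest the query carry exactly the values [100, 105, 95, 100, 110] from the text; the function name `knn_regress` is likewise an assumption.

```python
def knn_regress(xs, ys, query, k):
    """Predict a continuous value as the mean of the k nearest neighbours' targets."""
    # Order training indices by distance to the query (one feature, so |x - query|).
    order = sorted(range(len(xs)), key=lambda i: abs(xs[i] - query))
    nearest_targets = [ys[i] for i in order[:k]]
    return sum(nearest_targets) / k, nearest_targets


# Hypothetical 1-D training data; the five points closest to the query
# carry the values [100, 105, 95, 100, 110] from the text.
xs = [1, 2, 3, 4, 5, 20, 21]
ys = [100, 105, 95, 100, 110, 300, 310]
prediction, used = knn_regress(xs, ys, query=3, k=5)
print(prediction)  # 102.0
```

The two distant points (targets 300 and 310) are excluded because they are not among the k = 5 nearest, so they do not pull the average up.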

With distance weighting, votes from neighbours close to the query get a higher relevance, for instance by taking the reciprocal (inverse) of the distance as each vote's weight. KNN can also be applied to clickstream data from websites.
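
Here is a small sketch of the reciprocal-distance weighting described above, contrasted with a plain majority vote. The points, labels, and function names are hypothetical. For reference, scikit-learn's `KNeighborsClassifier` exposes the same idea through its `weights="distance"` option.

```python
import math
from collections import Counter, defaultdict


def nearest(points, labels, query, k):
    # (distance, label) pairs for the k stored points closest to the query.
    return sorted((math.dist(p, query), lab) for p, lab in zip(points, labels))[:k]


def majority_vote(points, labels, query, k):
    # Plain KNN: every neighbour counts once.
    return Counter(lab for _, lab in nearest(points, labels, query, k)).most_common(1)[0][0]


def weighted_vote(points, labels, query, k):
    # Distance-weighted KNN: each vote is weighted by 1 / distance.
    scores = defaultdict(float)
    for d, lab in nearest(points, labels, query, k):
        if d == 0:
            return lab  # an exact match would get infinite weight
        scores[lab] += 1.0 / d
    return max(scores, key=scores.get)


points = [(1, 0), (0, 2.5), (3, 0), (10, 10)]
labels = ["red", "blue", "blue", "red"]
query = (0, 0)
print(majority_vote(points, labels, query, k=3))  # blue (2 votes to 1)
print(weighted_vote(points, labels, query, k=3))  # red (1.0 vs 0.4 + 0.333...)
```

The single red neighbour at distance 1 outweighs the two blue neighbours at distances 2.5 and 3, which is exactly the effect of giving closer votes more relevance.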

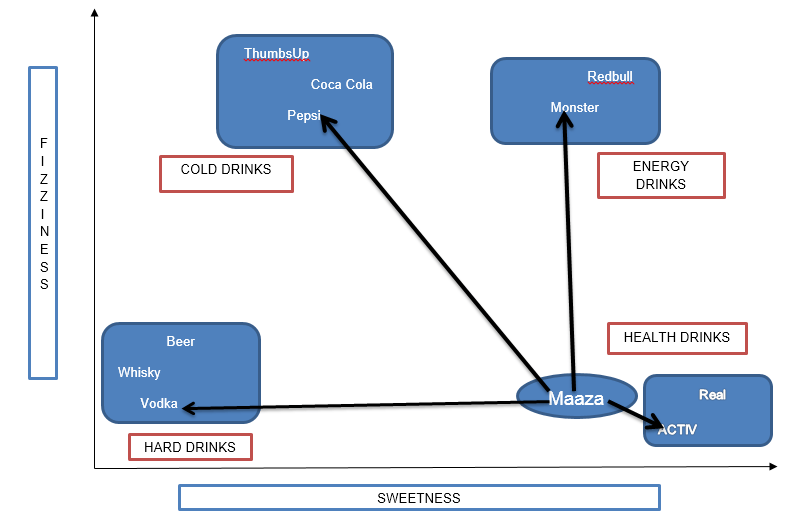

If we have a dataset that looks like this when plotted, then to classify its points the k-nearest neighbours algorithm first computes the distances between points to judge whether they are similar. KNN is a perfect first step for machine learning beginners: it is very easy to explain and simple to understand, yet powerful.
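
The "compute distances, then judge similarity" step can be sketched as follows, assuming Euclidean distance (a common default, though not the only choice). The points and the query are hypothetical.

```python
import math


def rank_by_distance(points, query):
    """Return (distance, index) pairs sorted by increasing Euclidean distance."""
    # Smaller distance = more similar to the query point.
    return sorted((math.dist(p, query), i) for i, p in enumerate(points))


points = [(2, 3), (5, 4), (1, 1), (6, 8)]
ranked = rank_by_distance(points, query=(2, 2))
for d, i in ranked:
    print(i, points[i], round(d, 3))
```

Every KNN variant in this article starts from a ranking like this one. The only differences are how many entries from the front are kept (k) and how they are combined (vote or average).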

Now we need to classify the new data point shown as a black dot (at point (60, 60)) into the blue or red class. KNN is a simple algorithm that stores all available cases and classifies any new case by a majority vote of its k nearest neighbors. If the value of k is 5, it looks for the 5 nearest neighbors of that data point.
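
The (60, 60) example can be sketched with k = 5 as follows. The red and blue coordinates are invented for illustration because the original figure's data is not available, so the result reflects only this toy set.

```python
import math
from collections import Counter


def knn_classify(points, labels, query, k):
    # Keep the k stored cases closest to the query ...
    nearest = sorted(zip(points, labels), key=lambda pl: math.dist(pl[0], query))[:k]
    # ... and let them vote; the most common label wins.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0], votes


# Hypothetical red/blue training points (the original figure's data is not available).
points = [(55, 58), (62, 64), (58, 61), (20, 25),
          (70, 75), (80, 80), (65, 52), (66, 57)]
labels = ["red"] * 4 + ["blue"] * 4
label, votes = knn_classify(points, labels, query=(60, 60), k=5)
print(label, dict(votes))  # red {'red': 3, 'blue': 2}
```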

With k = 4, KNN finds the 4 nearest neighbors. In an Arduino sketch, the library is included with `#include <Arduino_KNN.h>`, and a new classifier is created with `KNNClassifier myKNN(INPUTS);`. This video explains KNN with a very simple example.

The choice of k affects which class a new point is assigned to. Despite its simplicity, KNN can yield highly competitive results.
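
To see how the choice of k can change the assigned class, here is a tiny hypothetical two-class dataset arranged so that the query at (0, 0) flips between classes as k grows.

```python
import math
from collections import Counter


def knn_classify(points, labels, query, k):
    nearest = sorted(zip(points, labels), key=lambda pl: math.dist(pl[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


# Hypothetical 2-class data arranged so the answer depends on k.
points = [(0.5, 0), (1, 0), (0, 1.2), (2, 0), (0, -2.2)]
labels = ["A", "B", "B", "A", "A"]
results = {k: knn_classify(points, labels, (0, 0), k) for k in (1, 3, 5)}
print(results)  # {1: 'A', 3: 'B', 5: 'A'}
```

With k = 1 only the closest point (A) counts, with k = 3 the two B points outvote it, and with k = 5 the two farther A points tip the vote back. In practice, k is usually chosen by evaluating several values on held-out data.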

In regression problems, the KNN algorithm predicts a new data point's continuous value by returning the average of its k nearest neighbours' values. Linear models, by contrast, are models that predict using lines or hyperplanes.

We assume k = 3, i.e. the algorithm considers the three nearest neighbours of the new point.

The points in your data are then sorted by increasing distance from the new point x. Datasets frequently have missing values, and the KNN algorithm can estimate those values in a process known as missing data imputation.
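
Here is a minimal sketch of KNN-based missing data imputation under simple assumptions: missing entries are `None`, distances are computed only on the columns the incomplete row actually has, and each gap is filled with the mean of that column over the k nearest complete rows. The function name and data are hypothetical. scikit-learn offers a production version as `KNNImputer` in `sklearn.impute`.

```python
import math


def knn_impute(rows, k):
    """Fill None entries with the column mean of the k nearest complete rows."""
    complete = [r for r in rows if None not in r]
    filled = []
    for row in rows:
        if None not in row:
            filled.append(list(row))
            continue
        # Compare rows only on the columns this row actually has.
        known = [j for j, v in enumerate(row) if v is not None]
        neighbours = sorted(
            complete,
            key=lambda other: math.dist([row[j] for j in known], [other[j] for j in known]),
        )[:k]
        new_row = list(row)
        for j, v in enumerate(row):
            if v is None:
                new_row[j] = sum(n[j] for n in neighbours) / len(neighbours)
        filled.append(new_row)
    return filled


rows = [
    [1.0, 2.0, 10.0],
    [1.1, 2.1, 12.0],
    [0.9, 1.9, 11.0],
    [5.0, 6.0, 50.0],
    [1.0, 2.0, None],  # third value is missing
]
filled = knn_impute(rows, k=3)
print(filled[4])  # [1.0, 2.0, 11.0]
```

The outlying row `[5.0, 6.0, 50.0]` is not among the three nearest, so it does not distort the estimate.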

To build the classifier in scikit-learn, import it with `from sklearn.neighbors import KNeighborsClassifier`, instantiate the learning model with k = 3 as `classifier = KNeighborsClassifier(n_neighbors=3)`, and fit it with `classifier.fit(X_train, y_train)`. Finally, we need to make predictions, using `classifier.predict(X_test)`.

When k is high, a counterbalance is provided by the distance-weighted k-nearest-neighbour approach. Nearest-neighbour methods can be used for both supervised and unsupervised learning, but KNN classification and regression, which learn from labelled examples, are supervised.

To calculate the distance, the attribute values must be real numbers.
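
Because distances need real-valued attributes, categorical attributes must be converted first. One common choice (not necessarily the one the original tutorials use) is one-hot encoding. Below is a sketch with a hypothetical record type of `(height_in_cm, colour)`.

```python
import math

colours = ["red", "green", "blue"]


def one_hot(value, categories):
    # Map a categorical value to a 0/1 vector so it can enter a distance formula.
    return [1.0 if value == c else 0.0 for c in categories]


def encode(record):
    # Hypothetical record = (height_in_cm, colour) -> all-real-number feature vector.
    height, colour = record
    return [height] + one_hot(colour, colours)


a = encode((170.0, "red"))
b = encode((172.0, "blue"))
print(a, b, math.dist(a, b))  # distance is sqrt(2**2 + 1 + 1)
```

A different colour contributes a fixed amount to the distance, while the numeric height contributes in proportion to its difference. This is why the scale of each attribute matters when distances are compared.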

In this case, you would classify the data point into class 2, because more circles than rectangles surround it. To improve accuracy, for example, we can apply the weighted KNN algorithm.

A second property that makes a big difference between machine learning algorithms is whether the models can estimate nonlinear relationships.

The example sketch uses the `Arduino_KNN` library. If k = 2, the algorithm has to check the two data points nearest to the new data point. Within the data science industry, KNN is more widely used to solve classification problems. There are workarounds for many of its disadvantages, which makes KNN a powerful classification tool.

In our case, however, the dataset contains categorical values. The same idea applies here: the KNN algorithm works on the assumption that similar things exist in close proximity; in other words, similar things stay close to each other.

KNN is easy to implement and understand, but it has a major drawback: it becomes significantly slower as the size of the data in use grows, because each new case is classified by comparing it with the stored cases.

If k = 1, the algorithm only has to check the single data point nearest to the new data point. KNN belongs to the supervised learning domain and finds wide application in pattern recognition, data mining, and intrusion detection.

First, import the iris dataset with `from sklearn.datasets import load_iris` and then `iris = load_iris()`.

In the example, choosing k = 2 assigns the unknown point (black circle) to class 2. Next, train the model with the `KNeighborsClassifier` class of sklearn.

k denotes the number of nearest neighbour points the algorithm considers. Based on those neighbours, it then decides which class the new point belongs to.

![How the KNN algorithm works (k-nearest neighbors)](https://i2.wp.com/i.ytimg.com/vi/JPmFVVWCdAc/maxresdefault.jpg)

The KNN algorithm can be applied to both classification and regression problems. A high value of k, for example, results in including instances that are very far away from the query instance.
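
Here is a sketch of why a very high k can mislead. With hypothetical data (two "near" points right next to the query, six "far" points in a distant cluster), a plain majority vote with k equal to the whole dataset simply returns the overall majority class. Reciprocal-distance weighting counteracts this.

```python
import math
from collections import Counter, defaultdict

# Hypothetical data: two points beside the query, six in a distant cluster.
points = [(0.5, 0.0), (0.0, 0.5),
          (10, 10), (10, 11), (11, 10), (12, 12), (11, 11), (10, 12)]
labels = ["near"] * 2 + ["far"] * 6
query = (0.0, 0.0)


def neighbours(k):
    return sorted(zip(points, labels), key=lambda pl: math.dist(pl[0], query))[:k]


def majority(k):
    # Unweighted vote: far-away instances count as much as close ones.
    return Counter(lab for _, lab in neighbours(k)).most_common(1)[0][0]


def weighted(k):
    # Distance-weighted vote: each neighbour contributes 1 / distance.
    scores = defaultdict(float)
    for p, lab in neighbours(k):
        scores[lab] += 1.0 / math.dist(p, query)
    return max(scores, key=scores.get)


for k in (1, 3, 8):
    print(k, majority(k), weighted(k))
```

At k = 8 the unweighted vote switches to "far" because six distant points outnumber the two close ones. The weighted vote still answers "near", since each close point carries a weight of 2 while each distant point carries less than 0.1.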

In KNN we have to supply the value of k, for example `knn = KNeighborsClassifier(n_neighbors=26)`, followed by `knn.fit(X, y)`; the predictions are then stored in `y_test_hat`.