Mount your Google Drive in Colab; our kernel may terminate for no reason.

We will resample one point per hour, since no drastic change is expected within 60 minutes. Here are 10 tips and tricks I gathered over time that will help you get the most out of Google Colab.
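
Here is a minimal sketch of this downsampling in plain Python. It assumes the raw series has one observation every 10 minutes (six per hour) and that keeping one reading per hour is acceptable. The helper name `downsample_hourly` and the sample values are hypothetical.

```python
def downsample_hourly(readings, per_hour=6, offset=5):
    """Keep one reading per hour from a series sampled every 10 minutes.

    `downsample_hourly` is an illustrative helper (hypothetical name).
    With per_hour=6 and offset=5 it keeps indices 5, 11, 17, ..., i.e.
    the last reading of each hour, the same as the slice readings[5::6].
    """
    return readings[offset::per_hour]


# Hypothetical 10-minute readings covering three hours (18 values).
readings = list(range(18))
print(downsample_hourly(readings))  # [5, 11, 17]
```

With pandas and a datetime index, `df.resample("60min")` followed by an aggregation such as `.mean()` is an alternative. It averages each hour instead of picking a single reading.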

A training loop feeds the dataset examples into the model to help it make better predictions. I am new to Google Colab and I don't know how to fix this. All this can be done in 3 lines of code that run in approximately 20 seconds (for this particular dataset). There are 8,000 training samples and 2,000 testing samples.
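
The following minimal sketch in plain Python makes the idea of a training loop concrete. It fits a one-variable linear model y ≈ w·x + b by full-batch gradient descent on mean squared error. The synthetic data, learning rate, epoch count, and the helper name `train_linear` are assumptions for illustration, not the setup described in this article.

```python
import random


def train_linear(xs, ys, lr=0.05, epochs=500):
    """Fit y ~ w * x + b with full-batch gradient descent on MSE.

    `train_linear` is a hypothetical helper used only for illustration.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: prediction error for every training example.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Update step: move the parameters against the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


random.seed(0)
xs = [random.uniform(-1, 1) for _ in range(200)]
ys = [3.0 * x + 0.5 + random.gauss(0, 0.05) for x in xs]
w, b = train_linear(xs, ys)
print(round(w, 2), round(b, 2))  # should be close to 3.0 and 0.5
```

Real frameworks add mini-batching, an optimizer object, and automatic differentiation, but the forward pass, gradient, and update structure is the same.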

These tips are based on training YOLO using Darknet, but I'll try to generalize. The time taken for one epoch is 12 hours. Copy the zip file from Drive to Colab. This is a large computation for Google Colab.
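
The copy-then-unzip workflow can be sketched with Python's standard library. The sketch copies one archive from the mounted Drive to the VM's local disk, extracts it there, and deletes the local copy of the archive to free up space. The helper name `fetch_and_extract` is hypothetical, and so are any paths you pass to it.

```python
import os
import shutil
import zipfile


def fetch_and_extract(src_zip, work_dir, extract_dir):
    """Copy a zip from (mounted) Drive to local disk, extract it, delete the copy.

    `fetch_and_extract` is an illustrative helper, not a Colab API.
    """
    os.makedirs(work_dir, exist_ok=True)
    local_zip = os.path.join(work_dir, os.path.basename(src_zip))
    # Copying one archive avoids many small reads over the Drive mount.
    shutil.copy(src_zip, local_zip)
    with zipfile.ZipFile(local_zip) as zf:
        zf.extractall(extract_dir)
    # Remove the local archive to free up disk space on the Colab VM.
    os.remove(local_zip)
    return extract_dir
```

In a Colab cell this might be called as `fetch_and_extract("/content/drive/MyDrive/dataset.zip", "/content", "/content/data")`, where all three paths are placeholders for your own Drive layout.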

There are some points to consider in your training. When I compared my model's training time on Colab with another student's training time on their local machine, Colab could train a…

Kaggle had its GPU upgraded from a K80 to an NVIDIA Tesla P100. With Colab Pro+ your connection is even more stable. Google Colab's extensions let you save to Google Drive, display pandas DataFrames interactively, and more.

In the free version of Colab, notebooks can run for at most 12 hours, and idle timeouts are much stricter than in Colab Pro or Pro+. Google Colab allows you to save models and… ResNet-101 is a very deep model, and it takes too much memory and compute to train.
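
A free session can end after at most 12 hours, or earlier on an idle timeout. One common precaution is to track elapsed wall-clock time and stop, after saving a checkpoint, before the limit is reached. Below is a minimal sketch that assumes a hypothetical 30-minute safety margin. The class name `SessionBudget` is illustrative. The clock starts when the object is created, not when the VM starts.

```python
import time


class SessionBudget:
    """Track elapsed time against a session limit with a safety margin.

    `SessionBudget` is a hypothetical helper; the 12-hour limit and
    30-minute margin are assumptions you should adjust.
    """

    def __init__(self, limit_s=12 * 3600, margin_s=30 * 60, clock=time.monotonic):
        self.limit_s = limit_s
        self.margin_s = margin_s
        self.clock = clock
        self.start = clock()  # timing starts when the object is created

    def elapsed(self):
        return self.clock() - self.start

    def should_stop(self):
        # True once we are within `margin_s` seconds of the limit.
        return self.elapsed() >= self.limit_s - self.margin_s
```

Inside a training loop one might check `budget.should_stop()` after each epoch. If it returns `True`, save a checkpoint and break. Create the object at the top of the notebook so the measured time tracks the session as closely as possible.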

Remove the zip file to free up space (in Colab…). Second, establish an SSH connection from your local machine to the… Here we are picking ~300,000 data points for training. More technically, Colab is a hosted Jupyter Notebook service that requires no setup to use, while… To train a 22 kHz TalkNet, run the cells below and follow the instructions.
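
Picking a fixed-size random subset for training, and keeping the remainder for evaluation, can be sketched with the standard library. The helper name `pick_subset` and the default seed are hypothetical. The sizes used in any call are up to you.

```python
import random


def pick_subset(items, n_train, seed=42):
    """Return (train, rest): a random subset of n_train items and the remainder.

    `pick_subset` is an illustrative helper (hypothetical name).
    """
    if n_train > len(items):
        raise ValueError("n_train exceeds the number of available items")
    rng = random.Random(seed)  # seeded so the split is reproducible
    indices = list(range(len(items)))
    rng.shuffle(indices)
    train = [items[i] for i in indices[:n_train]]
    rest = [items[i] for i in indices[n_train:]]
    return train, rest
```

For example, `pick_subset(data, 8000)` on a 10,000-item list yields an 8,000 / 2,000 split. Passing the same seed reproduces the same split in a new Colab session.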

Colab gives the user a total execution time of 12 hours. As mentioned earlier, the model is trained on the pneumonia dataset. Speed up training using Google Colab's free tier with a GPU.

Multiply this gradient penalty by a constant weight factor.

According to Google's team behind Colab's free TPU: “artificial neural networks based on the AI applications used to train the TPUs are 15 to 30 times faster than CPUs and GPUs!” I am using a GPU as the hardware accelerator, and I thought that 1x Tesla K80 would take less than 5 minutes, but it is taking much longer.

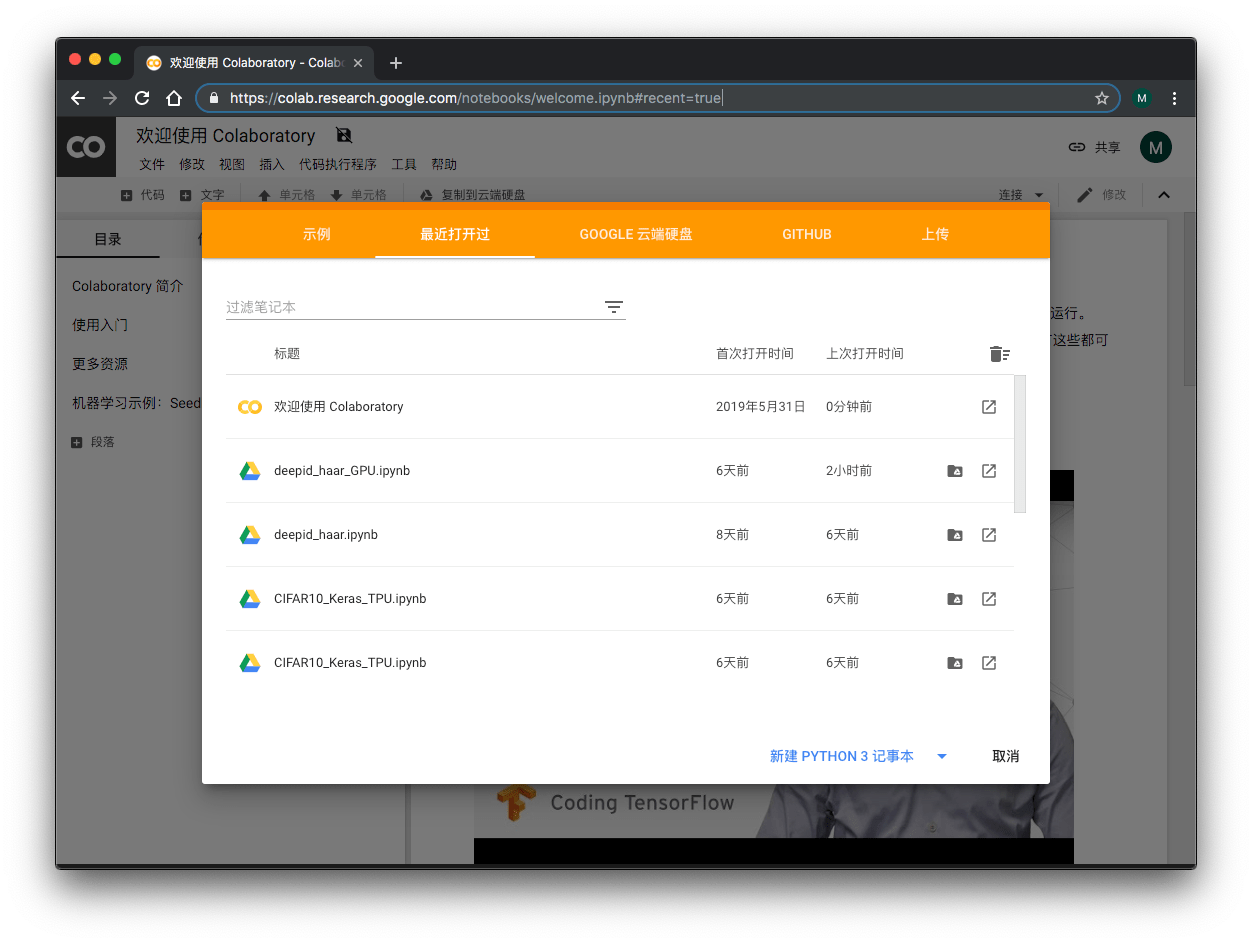

Every session needs authentication. Unzip the file.

The original paper recommends training…

I'm in get-to-the-point mode here, but you can find a step-by-step tutorial, the runnable Colab notebook, or the GitHub repo. Here we'll finally compare the differences in training time between the free and Pro tiers of Google Colab. The notebook will automatically resume training any models from the last saved checkpoint.
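
Resuming from the last saved checkpoint can be sketched without any framework. Save the training state to a file, preferably on the mounted Drive so it survives a disconnect, and load it at startup if it exists. Here the state is just the epoch number and a parameter dict. The JSON format, the function names, and the stand-in "training" step are assumptions. Real projects would use their framework's own save/load utilities.

```python
import json
import os


def save_checkpoint(path, epoch, params):
    """Write state to a temp file, then replace, so an interrupted write
    does not clobber the previous checkpoint (hypothetical helper)."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump({"epoch": epoch, "params": params}, f)
    os.replace(tmp_path, path)  # swap in the new checkpoint in one step


def load_checkpoint(path):
    """Return (next_epoch, params), or (0, None) if no checkpoint exists yet."""
    if not os.path.exists(path):
        return 0, None
    with open(path) as f:
        state = json.load(f)
    return state["epoch"] + 1, state["params"]


def train(path, total_epochs):
    """Hypothetical training driver that resumes from `path` if possible."""
    start_epoch, params = load_checkpoint(path)
    if params is None:
        params = {"w": 0.0}
    for epoch in range(start_epoch, total_epochs):
        params["w"] += 1.0  # stand-in for one epoch of real training
        save_checkpoint(path, epoch, params)
    return params
```

If the session dies mid-run, calling `train(path, total_epochs)` again in a new session continues from the epoch after the last one saved.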

This will take a while, and you might have to do it over multiple Colab sessions. Now, as soon as we click ‘OK’, it switches to the Colab window and maximizes it. You will need a Google Drive account with Backup and Sync enabled.

Return the generator and discriminator losses as a loss dictionary. Train the discriminator first. None of these, however, are perfect; with more tuning, more… It is slow compared to Colab.

Add the gradient penalty to the discriminator loss.

GPUs on Google Colab are shared across different VM instances, so you have only 16 GB of memory and a limited percentage of the GPU's power (currently a T4 GPU). Calculate the gradient penalty.
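
The GAN steps scattered through this article come from WGAN-GP training: calculate the gradient penalty, multiply it by a constant weight factor, and add it to the discriminator loss. Below is a framework-free sketch of those three steps. It assumes a toy critic D(x) = Σ wᵢ·xᵢ², whose input gradient 2·wᵢ·xᵢ can be written by hand. In practice an autodiff library computes that gradient. The weight 10 is the value commonly used with WGAN-GP. The toy critic and all function names are hypothetical.

```python
import math
import random


def critic(w, x):
    """Toy critic D(x) = sum_i w_i * x_i**2 (hypothetical, for illustration)."""
    return sum(wi * xi * xi for wi, xi in zip(w, x))


def critic_grad(w, x):
    """Hand-derived gradient of the toy critic with respect to its input x."""
    return [2.0 * wi * xi for wi, xi in zip(w, x)]


def gradient_penalty(w, real, fake, rng):
    """Mean of (||grad D(x_hat)||_2 - 1)**2 over random interpolates x_hat."""
    total = 0.0
    for r, f in zip(real, fake):
        eps = rng.random()  # one interpolation coefficient per example
        x_hat = [eps * ri + (1.0 - eps) * fi for ri, fi in zip(r, f)]
        norm = math.sqrt(sum(g * g for g in critic_grad(w, x_hat)))
        total += (norm - 1.0) ** 2
    return total / len(real)


def discriminator_loss(w, real, fake, gp_weight=10.0, seed=0):
    """WGAN-GP critic loss for equal-sized batches of real and fake samples."""
    rng = random.Random(seed)
    # Wasserstein critic cost: mean D(fake) minus mean D(real).
    d_cost = (sum(critic(w, f) for f in fake) - sum(critic(w, r) for r in real)) / len(real)
    # Calculate the gradient penalty and multiply it by a constant weight factor.
    gp = gradient_penalty(w, real, fake, rng)
    # Add the weighted gradient penalty to the discriminator loss.
    return d_cost + gp_weight * gp
```

The penalty pushes the critic's gradient norm toward 1 on points between real and fake samples. This is how WGAN-GP enforces the Lipschitz constraint instead of clipping weights.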

Train the discriminator and get the discriminator loss. It's better to work with a single zip file containing the small files than with the small files themselves.
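
Packing a directory of many small files into one archive before uploading it to Drive can be sketched with `shutil.make_archive` from the standard library. The helper name `pack_directory` is hypothetical, as are any directory and archive names you pass to it.

```python
import shutil


def pack_directory(src_dir, archive_base):
    """Zip every file under src_dir into archive_base + '.zip'; return its path.

    `pack_directory` is an illustrative helper (hypothetical name).
    """
    # root_dir=src_dir stores paths inside the archive relative to src_dir.
    return shutil.make_archive(archive_base, "zip", root_dir=src_dir)
```

Uploading the resulting single `.zip` and extracting it on the VM replaces thousands of individual file transfers with one.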

Let's see a quick chart to compare training time. If you use these images, skip directly to step 4.

The article is fully replicable: you can find the notebook here, train your own agent, and see for yourself just how amazing the power of AlphaZero + Colab + Julia is!

Colab Pro+ also offers background execution, which supports continuous code execution for up to 24 hours. The autotime extension only needs to be loaded once. Observations are recorded every 10 minutes, which means 6 times per hour.
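
In Colab or IPython, the third-party `ipython-autotime` package reports each cell's run time once it is loaded with `%load_ext autotime`. You may want to time code outside IPython, or time a single block inside a cell. For that, a small context manager built on `time.perf_counter` gives a similar wall-clock measurement. The name `timed` and its `sink` parameter are hypothetical.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(label, sink=print):
    """Report wall-clock time spent inside the `with` block (hypothetical helper)."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed = time.perf_counter() - start
        sink(f"{label}: {elapsed:.3f} s")


with timed("sum of squares"):
    total = sum(i * i for i in range(1_000_000))
```

Because the report happens in `finally`, the elapsed time is printed even if the timed block raises an exception.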

But before we jump into a comparison of TPUs vs. CPUs and GPUs and an implementation, let's define the TPU a bit more specifically.

Map your Google Drive in Colab notebooks: you can access your Google Drive as a network-mapped drive in the Colab VM runtime. After every 90 minutes of being… First, set up your Jupyter Notebook server using the…
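
In a Colab notebook, Drive is mounted with `drive.mount` from the `google.colab` package. The wrapper below is a minimal sketch that only attempts the mount when that package is importable, so the same code can also run outside Colab. The function name and the fallback of returning `None` are assumptions.

```python
import os


def mount_drive(mount_point="/content/drive"):
    """Mount Google Drive when running in Colab; return the Drive root or None.

    `mount_drive` is a hypothetical wrapper around google.colab.drive.mount.
    """
    try:
        from google.colab import drive  # only available inside Colab
    except ImportError:
        return None
    drive.mount(mount_point)  # asks for authorization in the notebook
    return os.path.join(mount_point, "MyDrive")
```

Inside Colab, files from your Drive then appear under `/content/drive/MyDrive`. Authorization is requested again in each new session.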

Many users have experienced kernel lag. With all the pieces in place, the model is ready for training!

With Google Colab, creating and sharing notebooks is intuitive and simple 😃. Colaboratory, or “Colab” for short, is a product from Google Research.

This approach is convenient if you want to see the execution time of every cell in a Google Colab notebook. If this is your first time using Colab, you might first need to click “Connect more apps”, search for “Colaboratory”, and then follow the step above.

You will need a PGN database with a decent number of games (500k is a good place to start) and plenty of free time.