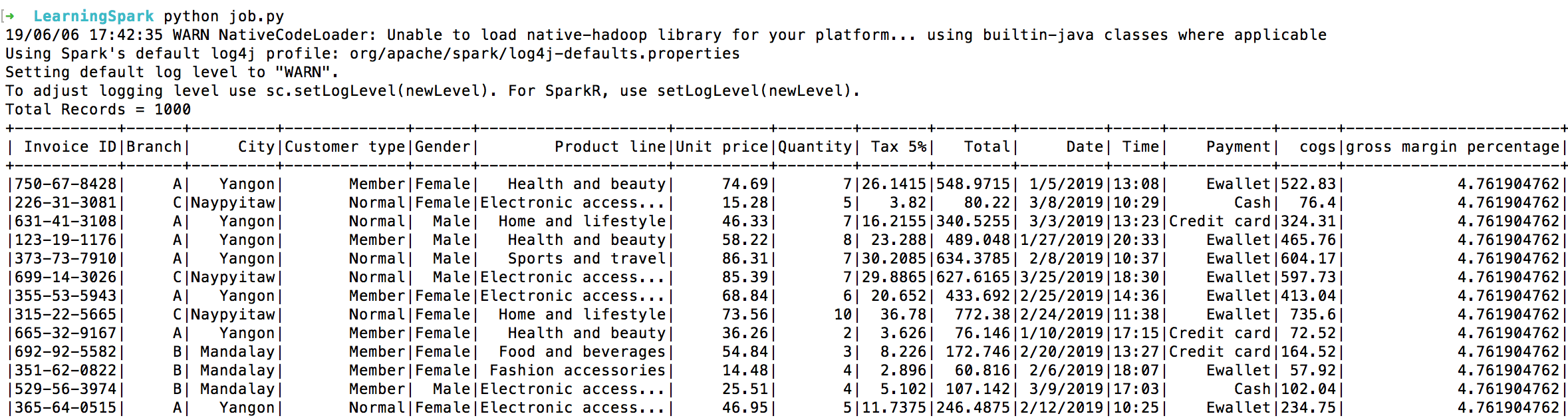

In this PySpark ETL project, you will learn to build a data pipeline and perform ETL operations by integrating PySpark with Apache Kafka and AWS Redshift. Here's the updated version of our ETL pipeline:

ETL Pipeline Python Example

Extract the zip file and move the CSV files for `car_sales` to your `etl_car_sales` directory. The standard execution of the function (with the `data` argument) is, however, still usable: `write_to_json("output.json", data=1.464)`.

Extract all of the fields from the split representation. In this exercise, we'll only be pulling data once to show how it's done. Create a virtual environment from the command line with `mkvirtualenv etl_car_sales`. Here's the updated version of our ETL pipeline:

Setting Up ETL Using Python Simplified Learn Hevo

Using a lambda expression with `apply()`: the default axis of `apply` is `axis=0`, and with this argument it works exactly like `map`. Only the last line of the ETL pipeline needs to change. 2) ETL pipelines always involve transformation. The full source code for this exercise is here. For that we can create another file, let's name it `main.py`, in.

Create your first ETL Pipeline in Apache Spark and Python by Adnan

The standard execution of the function (with the `data` argument) is, however, still usable: `write_to_json("output.json", data=1.464)`. This allows them to customize and control every aspect of the pipeline, but a handmade pipeline also requires more time and effort. Only the last line of the ETL pipeline needs to change. Here's the updated version of our ETL pipeline: Unzip the file.

python DAG(directed acyclic graph) dynamic job scheduler Stack Overflow

What we want to do with our ETL process is: `tmpfile = "dealership_temp.tmp"` (stores all extracted data), `logfile = "dealership_logfile.txt"` (all event logs will be stored here), `targetfile = "dealership_transformed_data.csv"` (transformed data is stored here). Below, we'll go over 4 of the top Python ETL frameworks that you should consider. The standard execution of the function (with the `data` argument).
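The three file names above can be kept as plain module-level constants. The following is a minimal sketch under that assumption; the `format_log_entry` and `log` helpers and the timestamp format are hypothetical and are not taken from the original tutorial.

```python
from datetime import datetime

# File names taken from the text above.
tmpfile = "dealership_temp.tmp"                 # stores all extracted data
logfile = "dealership_logfile.txt"              # all event logs are stored here
targetfile = "dealership_transformed_data.csv"  # transformed data is stored here


def format_log_entry(message, now=None):
    """Return one log line: an ISO timestamp, a comma, and the message."""
    now = now or datetime.now()
    return f"{now.isoformat(timespec='seconds')},{message}\n"


def log(message, path=logfile):
    """Append a timestamped entry to the log file (hypothetical helper)."""
    with open(path, "a", encoding="utf-8") as handle:
        handle.write(format_log_entry(message))
```

Calling `log("ETL job started")` would append one line to `dealership_logfile.txt`; keeping the formatting in its own function makes the log format easy to check without touching the file system.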

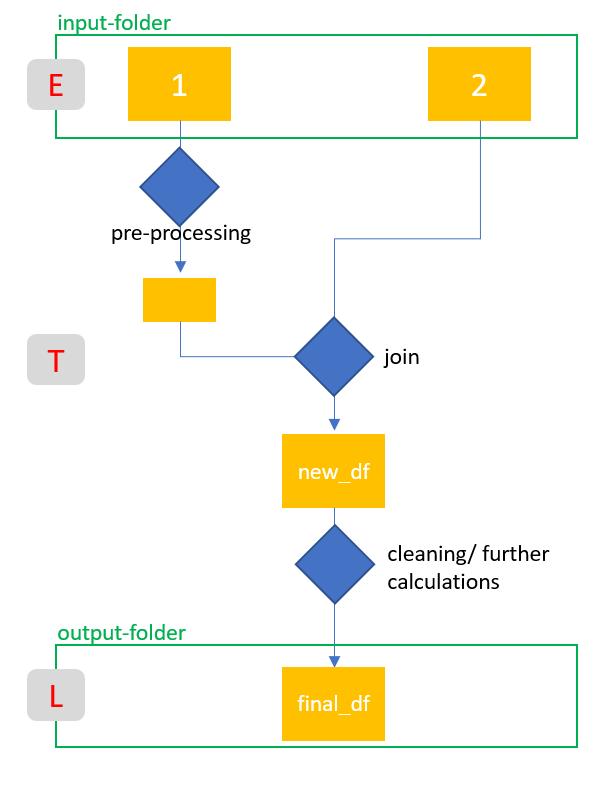

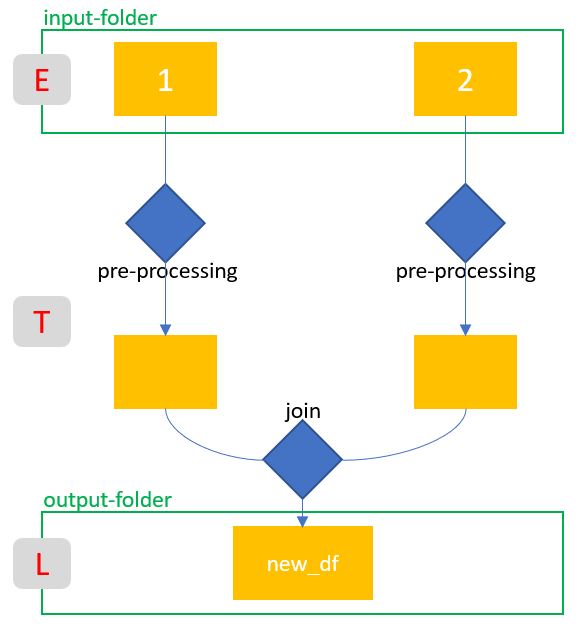

ETL Pipeline with join2 Michael Fuchs Python

Data lake with Apache Spark ⭐ 1. A data pipeline doesn't always end with the loading. To set up the Python ETL script, follow the steps below: Initialize a `created` variable that stores when the database record was created. `tmpfile = "dealership_temp.tmp"` (stores all extracted data), `logfile = "dealership_logfile.txt"` (all event logs will be stored here), `targetfile`.

Building Serverless ETL Pipelines with AWS Glue The Coral Edge

This will enable future pipeline steps to query data. It's also very straightforward and easy to build a simple pipeline as a Python script. The full source code for this exercise is here. Since this project was built for learning purposes and as an example, it functions only for a single scenario and data schema. We will also redefine these.

ETL Pipeline with join Michael Fuchs Python

Create a project called `etl_car_sales` with PyCharm. In your `etl.py`, import the following Python modules and variables to get started. Parse the XML files obtained in the previous step. The ETL pipeline concludes by loading the data into a database or data warehouse. We will also redefine these labels for consistency.

Building a Simple ETL Pipeline with Python and Google Cloud Platform

In your `etl.py`, import the following Python modules and variables to get started. An ETL pipeline consists of three general components: This is a very basic ETL pipeline so we will only consider a. To convert a Python function to a Prefect task, you first need to make the necessary import, `from prefect import task`, and decorate any function.

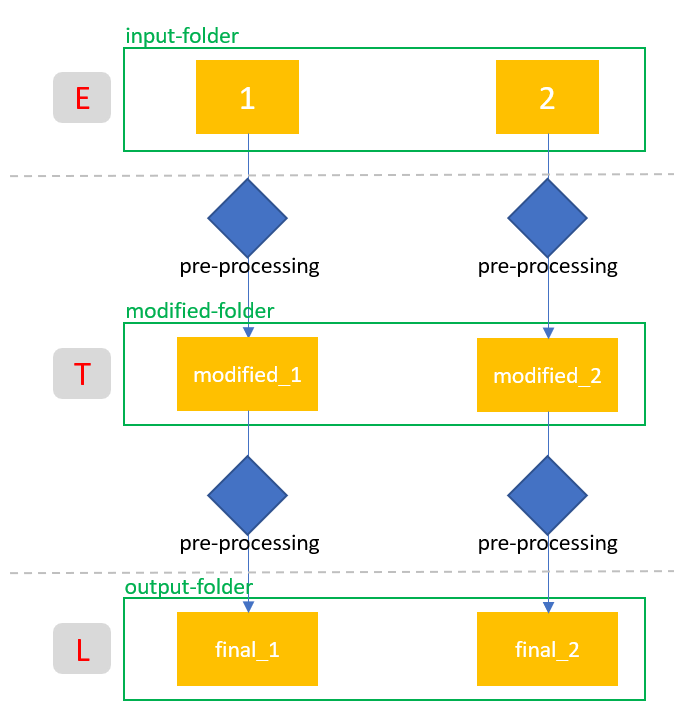

ETL Pipeline with intermediate storage Michael Fuchs Python

Here we will have two methods, `etl()` and `etl_process()`. Here's the updated version of our ETL pipeline: You can use the optional parameter with the tag version. Since this project was built for learning purposes and as an example, it functions only for a single scenario and data schema. ETL tools and services allow enterprises to quickly set up a data pipeline.

I built a geospatial ETL pipeline with python and this is what I

The project is built in Python and it has 2 main parts: `emp_df['app_tax'] = emp_df['sal'].apply(lambda x: ...)`. Create a project called `etl_car_sales` with PyCharm. Only the last line of the ETL pipeline needs to change. Extract: get data from a source such as an API.

ETL with a Glue Python Shell Job Load data from S3 to Redshift

To extract data from the source database, the script runs `source_cursor = source_cnx.cursor()`, `source_cursor.execute(query.extract_query)`, `data = source_cursor.fetchall()`, and `source_cursor.close()`; the load into the warehouse database then begins with `if data:`. Create a project called `etl_car_sales` with PyCharm. Data lake with Apache Spark ⭐ 1. And these mostly use Python scripting. 1) ETL pipelines are a subset of data pipelines.
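The extract-then-load pattern described above can be sketched end to end with the standard library. This is an illustration only: it uses in-memory `sqlite3` databases in place of the real source and warehouse connections, and the `etl` signature, table names, and sample rows are assumptions rather than the original project's code.

```python
import sqlite3


def etl(extract_query, insert_query, source_cnx, target_cnx):
    """Copy rows from a source database into a target database."""
    # Extract data from the source database.
    source_cursor = source_cnx.cursor()
    source_cursor.execute(extract_query)
    data = source_cursor.fetchall()
    source_cursor.close()

    # Load data into the warehouse database only if something was extracted.
    if data:
        target_cursor = target_cnx.cursor()
        target_cursor.executemany(insert_query, data)
        target_cnx.commit()
        target_cursor.close()
    return len(data)


# In-memory databases stand in for the real source and warehouse (assumption).
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE sales (model TEXT, price REAL)")
source.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("ritz", 5000.0), ("sx4", 7089.5)],
)
source.commit()

target = sqlite3.connect(":memory:")
target.execute("CREATE TABLE sales_copy (model TEXT, price REAL)")

rows_loaded = etl(
    "SELECT model, price FROM sales",
    "INSERT INTO sales_copy VALUES (?, ?)",
    source,
    target,
)
```

The `if data:` guard keeps the load step from opening a cursor and committing when the extract query returned nothing.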

Building an ETL Pipeline in Python by Daniel Foley Towards Data Science

For that we can create another file, let's name it `main.py`; in this file we will use a transformation class object and then run all of its methods one by one in a loop. 2) ETL pipelines always involve transformation. To convert a Python function to a Prefect task, you first need to make the necessary import.
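Running a transformation object's methods one by one in a loop might look like the sketch below. The `Transformation` class, its method names, and the `run_all` helper are hypothetical stand-ins, since the original class is not shown here.

```python
class Transformation:
    """Hypothetical transformation class; the method names are illustrative."""

    def __init__(self, rows):
        self.rows = rows

    def strip_whitespace(self):
        # Trim surrounding whitespace from every string value.
        self.rows = [{k: v.strip() for k, v in row.items()} for row in self.rows]

    def uppercase_model(self):
        # Normalize the "model" column to upper case.
        for row in self.rows:
            row["model"] = row["model"].upper()


def run_all(transformation, method_names):
    """Call each named method on the object, in the order given."""
    for name in method_names:
        getattr(transformation, name)()
    return transformation.rows


result = run_all(
    Transformation([{"model": " ritz "}]),
    ["strip_whitespace", "uppercase_model"],
)
```

Listing the method names explicitly keeps the execution order under the caller's control, which matters when one transformation depends on another.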

Building a Unified Data Pipeline with Apache Spark and XGBoost with Nan

Here we will have two methods, `etl()` and `etl_process()`. Copy everything from `01_etl_pipeline.py`, and you're ready to go. `emp_df['app_tax'] = emp_df['sal'].apply(lambda x: ...)`. To set up the Python ETL pipeline, you'll need to install the following modules: A data pipeline doesn't always end with the loading.

I built a geospatial ETL pipeline with python and this is what I

This will enable future pipeline steps to query data. As with all types of analysis, there are always tradeoffs to be made, and pros and cons of using particular techniques over others. Automating the ETL pipeline: the only thing remaining is how to automate this pipeline so that it runs once every day, even without human intervention. Here's.

GitHub BankNatchapol/ETLwithPythonpsycopg2 Create a ETL Pipeline

When you have substantially larger DataFrame objects to insert into your database, you can pass the `chunksize` argument to `to_gbq()` to insert only a given number of records at a time, say 10k at a time. Here's an example of CSV data on car sales: The full source code for this exercise is here. What we want to do with.

Apache Airflow: Programmatic platform for Data Pipelines and ETL/ELT

You can use the optional parameter with the tag version. 1) ETL pipelines are a subset of data pipelines. Initialize a `created` variable that stores when the database record was created. For example, calling `write_to_json("output.json")` will not execute the function, but will store the `output_filepath` argument until the loader is executed in a pipeline. Although our analysis has some advantages and is quite simplistic,.
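The deferred behaviour of `write_to_json` described above can be approximated with a small decorator. This is a sketch of the idea, not the library's actual implementation: the `loader` decorator is hypothetical, and it treats a missing `data` argument as the signal to defer, so it cannot write a literal `None`.

```python
import functools
import json
import os
import tempfile


def loader(func):
    """Defer execution when `data` is omitted (hypothetical decorator)."""

    @functools.wraps(func)
    def wrapper(*args, data=None, **kwargs):
        if data is None:
            # No data yet: remember the arguments and wait for the pipeline.
            return functools.partial(func, *args, **kwargs)
        # Data supplied: run the function immediately, as usual.
        return func(*args, data=data, **kwargs)

    return wrapper


@loader
def write_to_json(output_filepath, data):
    """Write `data` as JSON to `output_filepath` and return the path."""
    with open(output_filepath, "w", encoding="utf-8") as handle:
        json.dump(data, handle)
    return output_filepath


path = os.path.join(tempfile.mkdtemp(), "output.json")
pending = write_to_json(path)             # stores the path, writes nothing
existed_before = os.path.exists(path)
pending(data=1.464)                       # the pipeline supplies the data later
```

Calling `write_to_json(path, data=1.464)` directly still executes at once, which matches the "standard execution" mentioned elsewhere in the text.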

My Technical Blogs EventDriven ETL job using Python on AWS

And these mostly use Python scripting. 1) ETL pipelines are a subset of data pipelines. What we want to do with our ETL process is: import the Python modules with `import mysql.connector`, `import pyodbc`, and `import fdb`, then define `def etl(query, source_cnx, target_cnx):`. In your `etl.py`, import the following Python modules and variables to get started.

ETL pipeline on movie data using Python and postgreSQL

Set the path for the target files: Unzip the file in a local folder. In your `etl.py`, import the following Python modules and variables to get started. It's also very straightforward and easy to build a simple pipeline as a Python script. Data engineers, data scientists, machine learning engineers and data analysts mostly use data.

ETL Pipeline for COVID19 data using Python and AWS DEV Community

To build a stream processing ETL pipeline with Kafka, you need to: Extract the zip file and move the CSV files for `car_sales` to your `etl_car_sales` directory. The project is built in Python and it has 2 main parts: Note that some of the fields won't look "perfect" here; for example, the time will still have brackets.
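To illustrate why a field such as the time still has brackets after splitting, here is a minimal sketch that assumes a web-server access log in common log format. The sample line, the field positions, and the helper names are assumptions for illustration and do not come from the original exercise.

```python
from datetime import datetime

# Hypothetical sample line in common log format.
SAMPLE = '127.0.0.1 - - [09/Mar/2017:01:15:59 +0000] "GET /blog HTTP/1.1" 200 5316'


def parse_line(line):
    """Extract fields from the split representation of one log line."""
    parts = line.split(" ")
    return {
        "remote_addr": parts[0],
        "time_local": parts[3] + " " + parts[4],  # still wrapped in brackets
        "request_type": parts[5],                 # still has a leading quote
        "request_path": parts[6],
        "status": parts[8],
        "body_bytes_sent": parts[9],
    }


def parse_time(time_str):
    """Strip the brackets and convert the timestamp to a datetime."""
    return datetime.strptime(time_str.strip("[]"), "%d/%b/%Y:%H:%M:%S %z")


fields = parse_line(SAMPLE)
timestamp = parse_time(fields["time_local"])
```

Splitting on spaces is quick but leaves delimiters attached to some fields, so a second cleaning pass such as `parse_time` is needed before the values are stored.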

ETL Your Data Pipelines with Python and PostgreSQL by Sean Bradley

The Airflow DAG file, `dags/dagrun.py`, orchestrates the data pipeline tasks. This allows them to customize and control every aspect of the pipeline, but a handmade pipeline also requires more time and effort. What is an ETL pipeline? In this project, we try to help a music streaming startup, Sparkify, move their data warehouse to a data lake. To.

Data Pipeline Implementation TrackIt Cloud Consulting & S/W Development

Extract all of the fields from the split representation. The first step is to extract data from the source into Kafka, either by using the Confluent JDBC connector or by writing custom code that pulls each record from the source and writes it to a Kafka topic. Create a project called `etl_car_sales` with PyCharm. Automating the ETL pipeline the.

Computer Vision (Python OpenCV) With S4HANA Master Data Governance

The first step is to extract data from the source into Kafka, either by using the Confluent JDBC connector or by writing custom code that pulls each record from the source and writes it to a Kafka topic. It's also very straightforward and easy to build a simple pipeline as a Python script. ETL tools and services allow enterprises.

Building a Simple ETL Pipeline with Python and Google Cloud Platform

A data pipeline doesn't always end with the loading. Using a lambda expression with `apply()`: the default axis of `apply` is `axis=0`, and with this argument it works exactly like `map`. To set up the Python ETL script, follow the steps below: Note that some of the fields won't look "perfect" here; for example, the time will.

Create your first ETL Pipeline in Apache Spark and Python Adnan's

The Airflow DAG file, `dags/dagrun.py`, orchestrates the data pipeline tasks. As with all types of analysis, there are always tradeoffs to be made, and pros and cons of using particular techniques over others. The Python modules are imported with `import mysql.connector`, `import pyodbc`, and `import fdb`, followed by `def etl(query, source_cnx, target_cnx):`. What is an ETL pipeline? Copy everything from `01_etl_pipeline.py`, and you're ready to.

I built a geospatial ETL pipeline with python and this is what I

To set up the Python ETL pipeline, you'll need to install the following modules: The standard execution of the function (with the `data` argument) is, however, still usable: `write_to_json("output.json", data=1.464)`. Example ETL job using Python. To convert a Python function to a Prefect task, you first need to make the necessary import, `from prefect import task`, and decorate any.

Python ETL Tools Best 8 Options. Want to do ETL with Python? Here are

In your `etl.py`, import the following Python modules and variables to get started. A data pipeline doesn't always end with the loading. Here we will have two methods, `etl()` and `etl_process()`. We will also redefine these labels for consistency. The project is built in Python and it has 2 main parts:

The Python modules are imported with `import mysql.connector`, `import pyodbc`, and `import fdb`; the variables come from `from variables import datawarehouse_name`. What is an ETL pipeline? The project is built in Python and it has 2 main parts: Extract the zip file and move the CSV files for `car_sales` to your `etl_car_sales` directory. We will also redefine these labels for consistency. Here's the updated version of our ETL pipeline:

Automating the ETL pipeline: the only thing remaining is how to automate this pipeline so that it runs once every day, even without human intervention. The standard execution of the function (with the `data` argument) is, however, still usable: `write_to_json("output.json", data=1.464)`. Let's establish the ETL pipeline.